Home

I am a postdoctoral researcher and lecturer at ETH Zurich. My research interests are probability theory (in particular random matrices), statistical physics, and statistical learning theory.

CV

-

BSc Mathematics

ETH Zürich

-

BSc Mathematics

LMU Munich

-

MSc Theoretical and Mathematical Physics

LMU Munich

-

MASt Mathematics

University of Cambridge

-

PhD Mathematics

IST Austria

-

Industry Sabbatical

Bosch Center for Artificial Intelligence

-

Junior Fellow

ETH Institute for Theoretical Studies

-

SNF Ambizione Fellow

ETH Zurich

Selected research

-

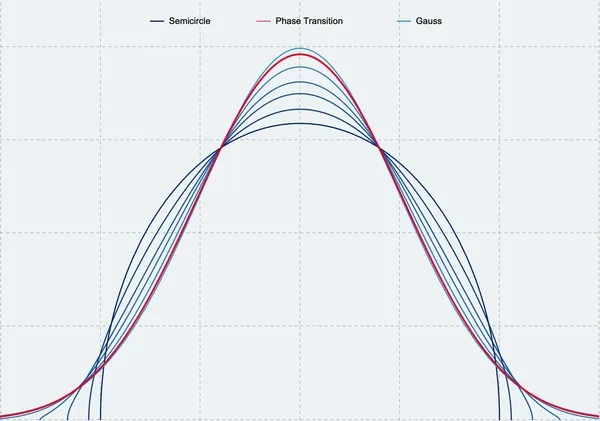

Phase Transition in the Density of States of Quantum Spin Glasses

László Erdős, Dominik Schröder

Math. Phys. Anal. Geom., Vol. 17 (2014), Issue 3-4

We demonstrate a transition between the Gaussian and the semicircle law using q-Hermite polynomials. This work has inspired a large body of follow-up research on the SYK model of quantum gravity.
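As a rough illustration of how q-Hermite polynomials interpolate between the two laws, the sketch below computes moments of the q-Gaussian law, i.e. the measure whose monic orthogonal polynomials satisfy the q-Hermite recurrence x H_n = H_{n+1} + [n]_q H_{n-1}. This is a textbook computation and is not meant to reconstruct the argument of the paper. The function names `q_int` and `q_gaussian_moment` are hypothetical. At q = 1 the recurrence is the Hermite one and the moments are Gaussian, (2k-1)!!. At q = 0 every coefficient equals 1 and the moments are the Catalan numbers of the semicircle law.

```python
def q_int(n: int, q: float) -> float:
    """q-integer [n]_q = 1 + q + ... + q^(n-1)."""
    return sum(q**i for i in range(n))


def q_gaussian_moment(k: int, q: float) -> float:
    """k-th moment of the q-Gaussian law (illustrative sketch).

    The moment equals the (0, 0) entry of J^k, where J is the Jacobi matrix
    of the q-Hermite recurrence. Expanding J^k gives a sum over Dyck paths
    in which each up-step has weight 1 and each down-step from height h has
    weight [h]_q. This is evaluated by dynamic programming over heights.
    """
    # weights[h] = total weight of all partial paths currently at height h
    weights = {0: 1.0}
    for _ in range(k):
        new = {}
        for h, w in weights.items():
            # Up-step h -> h+1 carries weight 1.
            new[h + 1] = new.get(h + 1, 0.0) + w
            # Down-step h -> h-1 carries the recurrence coefficient [h]_q.
            if h > 0:
                new[h - 1] = new.get(h - 1, 0.0) + w * q_int(h, q)
        weights = new
    # Only paths that return to height 0 contribute to the moment.
    return weights.get(0, 0.0)


if __name__ == "__main__":
    for q in (1.0, 0.5, 0.0):
        print(q, [q_gaussian_moment(2 * k, q) for k in range(1, 5)])
```

For intermediate q, the fourth moment is 2 + q. This is one crossing pair partition weighted by q plus two non-crossing ones, so the even moments move continuously between the Gaussian and the semicircle values.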

-

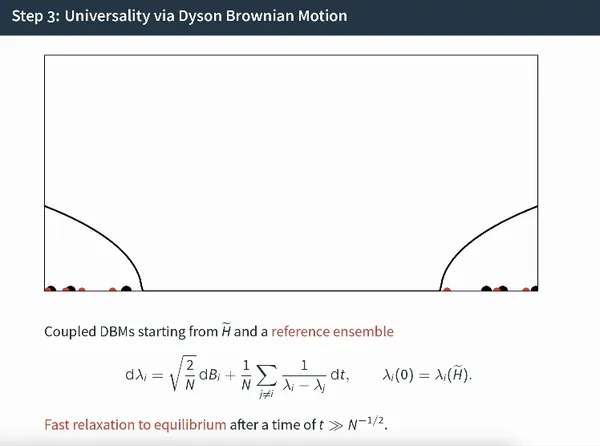

Random matrices with slow correlation decay

László Erdős, Dominik Schröder

Forum Math. Sigma, Vol. 7 (2017)

We prove universality for a large class of random matrices with correlated entries. This general result has since been used many times, including in more applied research.

-

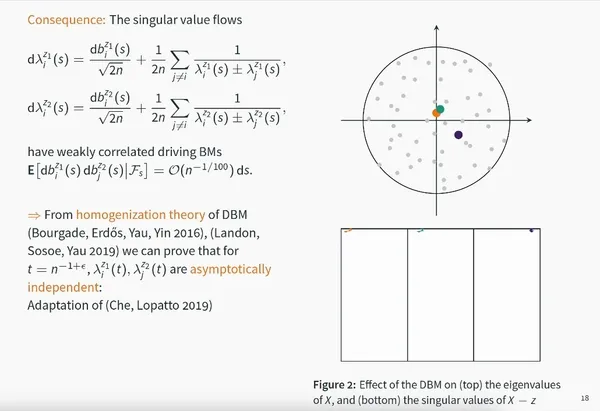

Central limit theorem for linear eigenvalue statistics of non-Hermitian random matrices

Giorgio Cipolloni, László Erdős, Dominik Schröder

Comm. Pure Appl. Math., Vol. 76 (2023)

We show that the linear eigenvalue statistics of random matrices with i.i.d. entries are asymptotically described by a rank-one perturbation of the Gaussian free field on the unit disc.

-

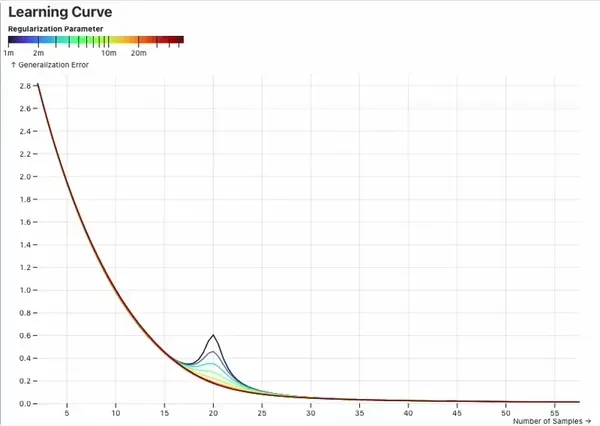

Deterministic equivalent and error universality of deep random features learning

Dominik Schröder, Hugo Cui, Daniil Dmitriev, Bruno Loureiro

ICML (2023)

We show that the generalization error of deep random feature models is the same as the generalization error of Gaussian features with matched covariance, and derive an explicit expression for the generalization error.

-

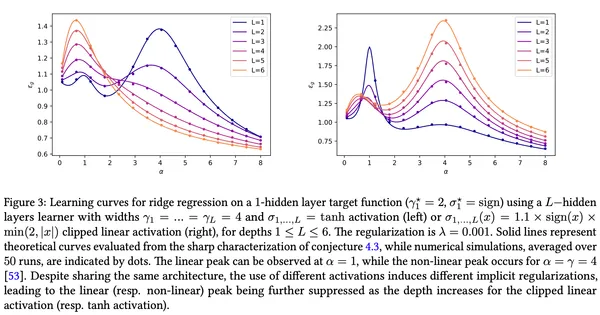

Asymptotics of Learning with Deep Structured (Random) Features

Dominik Schröder, Hugo Cui, Daniil Dmitriev, Bruno Loureiro

Preprint (2024)

We derive an approximate formula for the generalization error of deep neural networks with structured (random) features, confirming a widely believed conjecture. We also show that our results can capture feature maps learned by deep, finite-width neural networks trained with gradient descent.

Selected projects

-

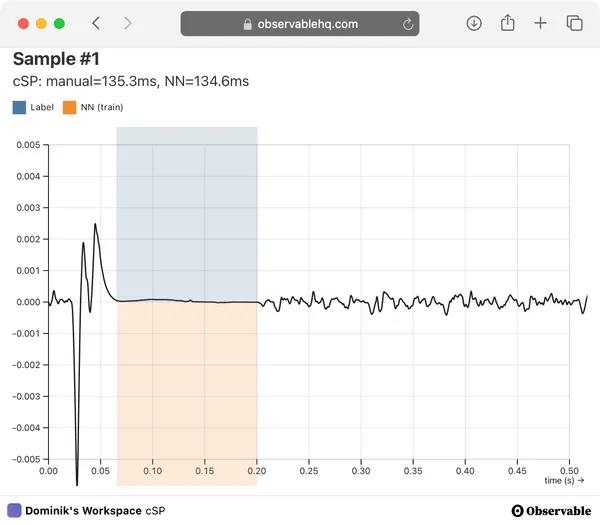

Regression on EEG data using xResnet1d to predict the onset and offset of the cortical silent period. Work in progress.

Contact

- Email dominik.schroeder[at]math.ethz.ch

- Phone +41 44 633 84 43

- Office HG E 66.1

ETH Zurich, Rämistrasse 101, HG E 66.1, 8092 Zurich