Robotics

Robotics is shifting from rigid machines to adaptable, resilient systems capable of safe interaction with humans and complex environments. Soft robotics, robotic materials, and advanced mechanism design enable robots with compliant, tunable, and bio-inspired structures that better integrate with the physical world. By leveraging programmable materials and physics-based modeling, we can create robots with enhanced adaptability and functionality. This research has broad implications for medical devices, wearable assistive systems, and autonomous exploration, pushing the boundaries of how robots interact with and navigate their surroundings.

A Topology Optimization Approach for Designing Robotic Skins

J. Montes, R. Hinchet, S. Coros, B. Thomaszewski

ACM Transactions on Graphics (Proc. ACM SIGGRAPH Asia 2023)

Soft robotics offers unique advantages in manipulating fragile or deformable objects, human-robot interaction, and exploring inaccessible terrain. However, designing soft robots that produce large, targeted deformations is challenging. In this paper, we propose a new methodology for designing soft robots that combines optimization-based design with a simple and cost-efficient manufacturing process. Our approach is centered around the concept of robotic skins: thin fabrics with 3D-printed reinforcement patterns that augment and control plain silicone actuators. Decoupling shape control from actuation is what keeps the manufacturing process simple and inexpensive. Unlike previous methods that rely on empirical design heuristics for generating desired deformations, our approach automatically discovers complex reinforcement patterns without any need for domain knowledge or human intervention. This is achieved by casting reinforcement design as a nonlinear constrained optimization problem and using a novel, three-field topology optimization approach tailored to fabrics with 3D-printed reinforcements. We demonstrate the potential of our approach by designing soft robotic actuators capable of various motions such as bending, contraction, twist, and combinations thereof. We also demonstrate applications of our robotic skins to robotic grasping with a soft three-finger gripper and to locomotion tasks for a soft quadrupedal robot.
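To make the "three-field" idea concrete, the sketch below follows the formulation that is common in the topology optimization literature: a raw design field is smoothed by a density filter, and the smoothed field is pushed toward 0 or 1 by a smooth Heaviside projection. Whether the paper uses exactly these operators is an assumption; the function names, the filter radius, the projection parameters `beta` and `eta`, and the stiffness values are all hypothetical and chosen only for illustration.

```python
import numpy as np


def density_filter(design, radius):
    """Field 2: smooth the raw design field with a cone-shaped filter."""
    r = int(np.ceil(radius))
    offsets = np.arange(-r, r + 1)
    dy, dx = np.meshgrid(offsets, offsets, indexing="ij")
    # Cone weights decay linearly to zero at the filter radius.
    weights = np.maximum(0.0, radius - np.hypot(dx, dy))
    padded = np.pad(design, r, mode="edge")
    out = np.zeros(design.shape, dtype=float)
    h, w = design.shape
    for i in range(2 * r + 1):
        for j in range(2 * r + 1):
            out += weights[i, j] * padded[i:i + h, j:j + w]
    return out / weights.sum()


def heaviside_projection(filtered, beta=8.0, eta=0.5):
    """Field 3: push filtered densities toward 0 or 1 (smooth threshold)."""
    num = np.tanh(beta * eta) + np.tanh(beta * (filtered - eta))
    den = np.tanh(beta * eta) + np.tanh(beta * (1.0 - eta))
    return num / den


def reinforcement_stiffness(projected, e_fabric=1.0, e_print=50.0, penalty=3.0):
    """Hypothetical power-law interpolation between fabric and print stiffness."""
    return e_fabric + projected**penalty * (e_print - e_fabric)


# Field 1: the raw design variables an optimizer would update (random here).
rng = np.random.default_rng(0)
design = rng.random((16, 16))
filtered = density_filter(design, radius=2.5)
projected = heaviside_projection(filtered)
stiffness = reinforcement_stiffness(projected)
```

In a full pipeline, an optimizer would update only `design`; the filter imposes a minimum feature size on the reinforcement pattern, and the projection yields a nearly binary layout that can be printed onto the fabric.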

Compliant Mechanisms

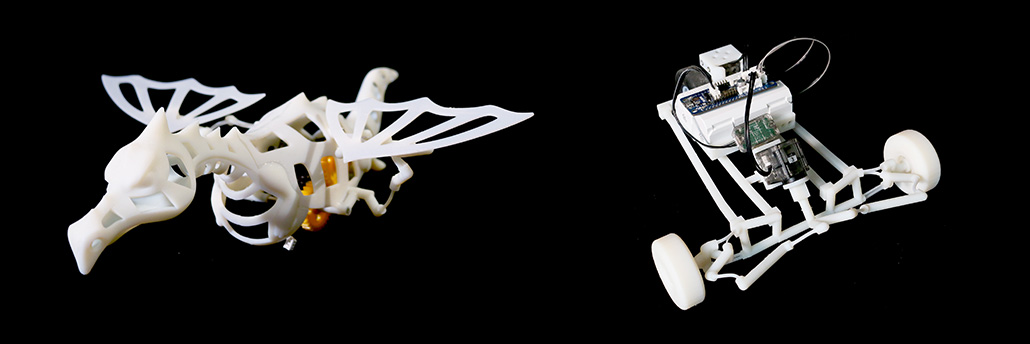

We present a computational tool for designing compliant mechanisms. Our method takes as input a conventional, rigidly articulated mechanism defining the topology of the compliant design. This input can be either planar or spatial, and we support a number of common joint types which, whenever possible, are automatically replaced with parameterized flexures. As the technical core of our approach, we describe a number of objectives that shape the design space in a meaningful way, including trajectory matching, collision avoidance, lateral stability, resilience to failure, and minimizing motor torque. Optimal designs in this space are obtained as solutions to an equilibrium-constrained minimization problem that we solve using a variant of sensitivity analysis. We demonstrate our method on a set of examples that range from simple four-bar linkages to full-fledged animatronics, and verify the feasibility of our designs by manufacturing physical prototypes.
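The equilibrium-constrained minimization mentioned above can be illustrated on a toy problem. The sketch below is not the paper's formulation: it assumes a single nonlinear spring with design parameter `p` (linear stiffness) whose displacement `x` must satisfy the equilibrium condition g(x, p) = p·x + x³ − load = 0, and an objective that pulls `x` toward a target. Sensitivity analysis uses the implicit function theorem, dx/dp = −(∂g/∂x)⁻¹ ∂g/∂p, to obtain the gradient of the objective with respect to `p` without differentiating through the equilibrium solver. All names and numbers are hypothetical.

```python
def solve_equilibrium(p, load=1.0, iters=50):
    """Newton's method on the residual g(x, p) = p*x + x**3 - load."""
    x = 0.0
    for _ in range(iters):
        residual = p * x + x**3 - load
        x -= residual / (p + 3.0 * x**2)
    return x


def objective(x, target):
    """Trajectory-matching style objective: squared distance to a target."""
    return 0.5 * (x - target) ** 2


def objective_gradient(p, target, load=1.0):
    """Gradient of the objective w.r.t. p via sensitivity analysis."""
    x = solve_equilibrium(p, load)
    dg_dx = p + 3.0 * x**2   # partial derivative of g w.r.t. the state x
    dg_dp = x                # partial derivative of g w.r.t. the parameter p
    dx_dp = -dg_dp / dg_dx   # implicit function theorem
    return (x - target) * dx_dp


# Gradient descent on the design parameter; the state always stays at equilibrium.
target = 0.5
p = 1.0
for _ in range(200):
    p -= 10.0 * objective_gradient(p, target)

x_final = solve_equilibrium(p)
```

For this toy problem the target displacement is reached exactly when p·0.5 + 0.125 = 1, that is, at p = 1.75, which gives a simple check on the optimizer. In a real mechanism the scalar division becomes a linear solve with the equilibrium Jacobian.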

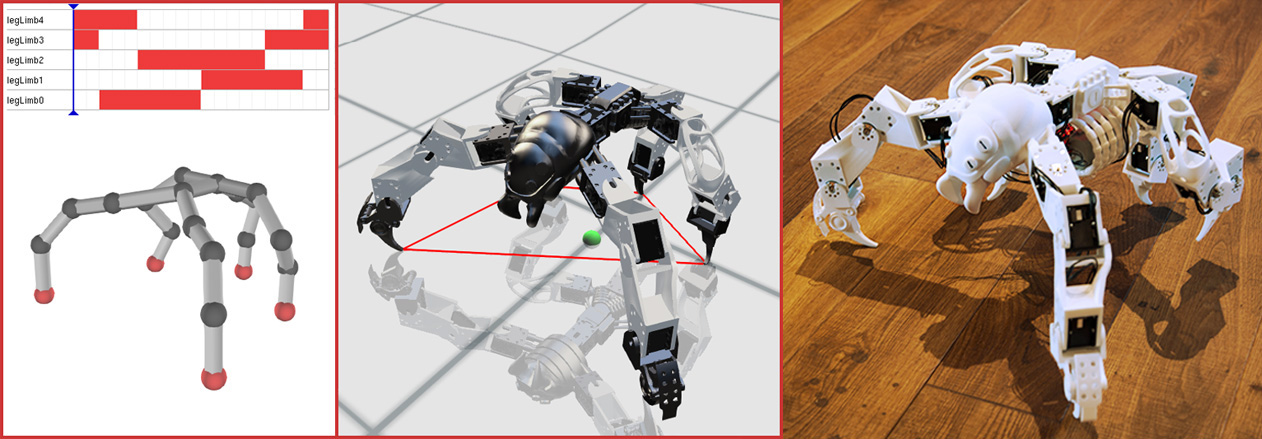

Optimization-Based Gait Design

We present an interactive design system that allows casual users to quickly create 3D-printable robotic creatures. Our approach automates the tedious parts of the design process while providing ample room for customization of morphology, proportions, gait, and motion style. The technical core of our framework is an efficient optimization-based solution that generates stable motions for legged robots of arbitrary designs. An intuitive set of editing tools allows the user to interactively explore the space of feasible designs and to study the relationship between morphological features and the resulting motions. Fabrication blueprints are generated automatically such that the robot designs can be manufactured using 3D printing and off-the-shelf servo motors. We demonstrate the effectiveness of our solution by designing six robotic creatures with a variety of morphological features: two, four, or five legs, point or area feet, actuated spines, and different proportions. We validate the feasibility of the designs generated with our system through physics simulations and physically fabricated prototypes.

Synergies between Wheels and Legs

We present a computation-driven approach to design optimization and motion synthesis for robotic creatures that locomote using arbitrary arrangements of legs and wheels. Through an intuitive interface, designers first create unique robots by combining different types of servomotors, 3D-printable connectors, wheels, and feet in a mix-and-match manner. With the resulting robot as input, a novel trajectory optimization formulation generates walking, rolling, gliding, and skating motions. These motions emerge naturally based on the components used to design each individual robot. We exploit the particular structure of our formulation and make targeted simplifications to significantly accelerate the underlying numerical solver without compromising quality. This allows designers to interactively choreograph stable, physically valid motions that are agile and compelling. We furthermore develop a suite of user-guided, semi-automatic, and fully automatic optimization tools that enable motion-aware edits of the robot’s physical structure. We demonstrate the efficacy of our design methodology by creating a diverse array of hybrid legged/wheeled mobile robots, which we validate using physics simulation and fabricated prototypes.