Detail-Preserving Fluid Simulation

Advection-projection methods for fluid animation are widely appreciated for their stability and efficiency. However, the projection step dissipates energy from the system, leading to artificial viscosity and suppression of small-scale details. We propose an alternative approach for detail-preserving fluid animation that is surprisingly simple and effective. We replace the energy-dissipating projection operator applied at the end of a simulation step by an energy-preserving reflection operator applied at mid-step. We show that doing so leads to a two-orders-of-magnitude reduction in energy loss, which in turn yields vastly improved detail preservation. We evaluate our reflection solver on a set of 2D and 3D numerical experiments and show that it compares favorably to state-of-the-art methods. Finally, our method integrates seamlessly with existing advection-projection solvers and requires very little additional implementation.
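To illustrate why a reflection can preserve energy where a projection cannot, the following is a minimal toy sketch, not the solver described in the abstract. It assumes a three-component vector in place of a velocity field and a single unit direction `n` standing in for the component that a pressure projection would remove. The helper names `project`, `reflect`, and `energy` are hypothetical and chosen for this illustration only.

```python
def dot(a, b):
    """Euclidean inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def project(v, n):
    """Orthogonal projection P v: remove the component of v along unit vector n."""
    s = dot(v, n)
    return [x - s * y for x, y in zip(v, n)]


def reflect(v, n):
    """Reflection R v = 2 P v - v: flip the removed component instead of dropping it."""
    p = project(v, n)
    return [2.0 * a - b for a, b in zip(p, v)]


def energy(v):
    """Kinetic-energy analog: half the squared norm."""
    return 0.5 * dot(v, v)


# Toy data (assumption): n plays the role of the component a projection discards.
v = [3.0, 4.0, 0.0]
n = [1.0, 0.0, 0.0]

print(energy(v))              # energy before either operator
print(energy(project(v, n)))  # projection discards the energy carried along n
print(energy(reflect(v, n)))  # reflection keeps the norm, hence the energy
```

In this toy setting the projected vector has strictly less energy whenever `v` has a component along `n`, while the reflected vector has the same energy as `v`. An actual fluid solver would use a pressure projection onto divergence-free fields in place of `project`.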

Art-Directable Hair Simulation

We present a method for adding artistic control to physics-based hair simulation. Taking as input an animation of a coarse set of guide hairs, we constrain a subsequent higher-resolution simulation of detail hairs to follow the input motion in a spatially averaged sense. The resulting high-resolution motion adheres to the artistic intent but is enhanced with detailed deformations and dynamics generated by physics-based simulation. The technical core of our approach is formed by a set of tracking constraints, requiring the center of mass of a given subset of detail hairs to maintain its position relative to a reference point on the corresponding guide hair. As a crucial element of our formulation, we introduce the concept of dynamically changing constraint targets that allow reference points to slide along the guide hairs to provide sufficient flexibility for natural deformations. We furthermore propose to regularize the null space of the tracking constraints based on variance minimization, effectively controlling the amount of spread in the hair. We demonstrate the ability of our tracking solver to generate directable yet natural hair motion on a set of targeted experiments and show its application to production-level animations.
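As a rough illustration of the tracking idea, the sketch below evaluates a center-of-mass constraint and a variance measure for a small set of points. It is a simplified reading of the abstract, not the authors' formulation: the function names, the fixed `offset`, and the use of mass-weighted variance as the spread measure are all assumptions made for this example, and the sliding of reference points along guide hairs is not modeled.

```python
def center_of_mass(points, masses):
    """Mass-weighted average position of a set of 3D points."""
    total = sum(masses)
    return tuple(
        sum(m * p[k] for p, m in zip(points, masses)) / total for k in range(3)
    )


def tracking_residual(points, masses, reference, offset):
    """Constraint residual com(points) - (reference + offset); zero when satisfied.

    `reference` is a point on the guide hair and `offset` the relative position
    to maintain (both hypothetical inputs for this sketch).
    """
    com = center_of_mass(points, masses)
    return tuple(c - (r + o) for c, r, o in zip(com, reference, offset))


def spread(points, masses):
    """Mass-weighted variance of the points about their center of mass."""
    com = center_of_mass(points, masses)
    total = sum(masses)
    return sum(
        m * sum((p[k] - com[k]) ** 2 for k in range(3))
        for p, m in zip(points, masses)
    ) / total


# Two configurations with the same center of mass but different spread.
masses = [1.0, 1.0]
narrow = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
wide = [(-1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
reference = (1.0, 1.0, 0.0)   # point on the guide hair (toy value)
offset = (0.0, -1.0, 0.0)     # relative position to maintain (toy value)

print(tracking_residual(narrow, masses, reference, offset))
print(tracking_residual(wide, masses, reference, offset))
print(spread(narrow, masses), spread(wide, masses))
```

Both configurations satisfy the center-of-mass constraint equally well, which shows why the constraint alone leaves the spread undetermined and why a regularizer such as variance minimization can be used to control it.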

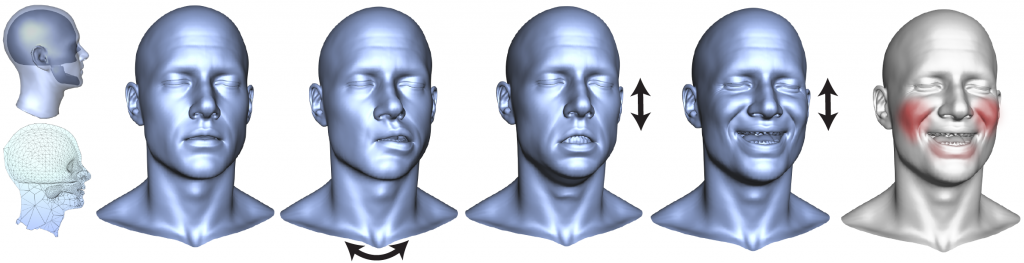

Physics-Enhanced Facial Animation

Facial animation is often created separately from overall body motion. Since convincing facial animation is challenging enough in itself, artists tend to create and edit the face motion in isolation. If the face animation is instead derived from motion capture, the capture is typically performed in a mo-cap booth with the actor sitting relatively still. In either case, recombining the isolated face animation with body and head motion is non-trivial and often looks uncanny if the body dynamics are not properly reflected on the face (e.g., the bouncing of facial tissue when running). We tackle this problem by introducing a simple and intuitive system for adding physics to facial blendshape animation. Unlike previous methods that try to add physics to face rigs, our method preserves the original facial animation as closely as possible. To this end, we present a novel simulation framework that uses the original animation as per-frame rest poses without adding spurious forces. As a result, in the absence of any external forces or rigid head motion, the facial performance will exactly match the artist-created blendshape animation. In addition, we propose the concept of blendmaterials to give artists an intuitive means to account for changing material properties due to muscle activation. This system automatically combines facial animation and head motion such that they are consistent, while preserving the original