Previously, I imported pronunciations of a bunch of characters from Wiktionary using resources from the Wikihan project. But then I thought, why not just simply all of Wiktionary?

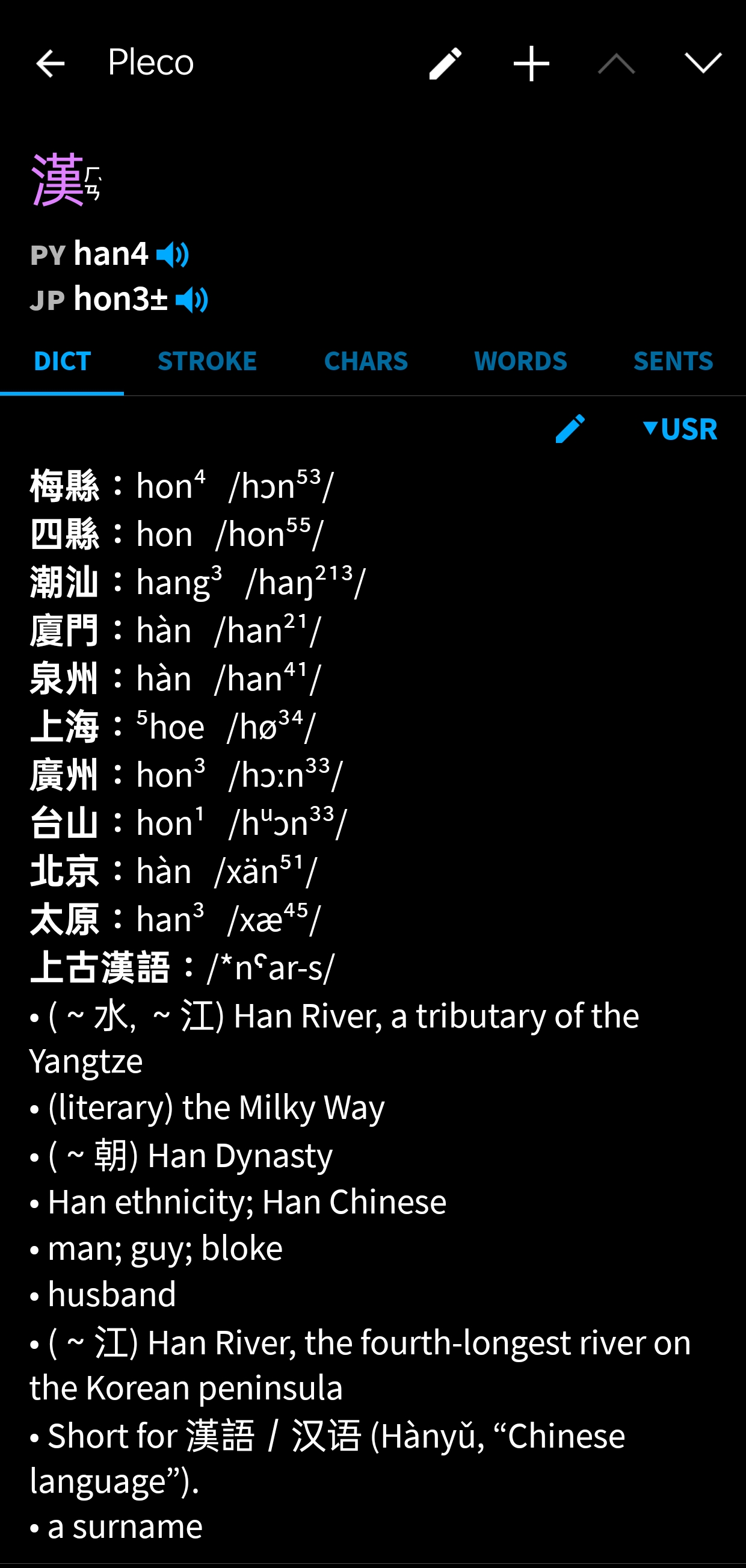

After some digging around, I found a project called Kaikki, which generates JSON files containing Wiktionary data for various different languages. The one that contains all Chinese words was 670MB! I then wrote a Python script to convert all of them into Pleco's dictionary format. This results in a dictionary with over 170000 entries, so it takes a while to import1. Below is a screenshot of the result.

Why stop there? Another Chinese dictionary is 漢典, which aggregates information from many other dictionaries like 國語辭典, 康熙字典, 说文 解字, and information like stroke order, and 五筆, 倉頡, 鄭碼 input codes. But the most relevant for this blog post is the 音韻方言 part. It is much more detailed then the data available on Wiktionary; it goes all the way and pin-points the town corresponding to a particular pronunciation, and it even lists the sources. In my experience it also has better support for topolects that aren't Mandarin or Cantonese. For example, for the character 窨, which I have never even seen before, it has pronunciations in five different topolects, whereas Wiktionary only has pronunciations three.

The problem with 漢典 is that there aren't any premade JSON files, nor does it offer an API for fetching data. So my only option was to scrape the website, which is considerably slower than importing from a local file, but in the end, it works.

Be sure to enable the "Unsafe mode" when importing, otherwise it will probably take days to complete.↩︎