Deep Multi-Modal Autoencoders for Robotic Scene Understanding

We explore the capabilities of Auto-Encoders to fuse the information available from cameras and depth sensors, and to reconstruct missing data, for scene understanding tasks. In particular, we consider three input modalities: RGB images, depth images, and semantic label information. We seek to generate complete scene segmentations and depth maps, given images and partial and/or noisy depth and semantic data. We formulate this objective of reconstructing one or more types of scene data using a Multi-modal stacked Auto-Encoder. We show that suitably designed Multi-modal Auto-Encoders can solve the depth estimation and semantic segmentation problems simultaneously, in the partial or even complete absence of some of the input modalities. We demonstrate that our method can run in real time (i.e., less than 40 ms per frame). We also show that our method has a significant advantage over other methods in that it can seamlessly use additional data that may be available, such as a sparse point cloud and/or incomplete coarse semantic labels.

A video describing our approach is here

A very preliminary MATLAB demo implementation can be downloaded here

Semantic Segmentation for Robotic Systems

Semantic mapping of the environment requires simultaneous segmentation and categorization of the acquired stream of sensory information. Existing methods typically treat semantic mapping as the final goal and differ in the number and types of semantic categories considered. We envision semantic understanding of the environment as an ongoing process and seek representations that can be refined and adapted depending on the task and the robot's interaction with the environment.

The proposed approach uses the Conditional Random Field framework to infer the semantic categories in a scene (e.g. ground, structure, furniture and props indoors, or ground, sky, building, vegetation and objects outdoors). Using visual and 3D data, a novel graph structure and an effective set of features are exploited for efficient learning and inference, obtaining better or comparable results at a fraction of the computational cost on publicly available RGB-D datasets and on datasets combining vision and 3D lidar sensors. The chosen representation naturally lends itself to on-line recursive belief updates with a simple soft data association mechanism. It can seamlessly integrate evidence from multiple sensors with overlapping but possibly different fields of view (FOV), account for missing data, and predict semantic labels over the spatial union of the sensors' coverage.
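The recursive belief update mentioned above can be illustrated with a plain discrete Bayes step over the indoor categories. This is a minimal sketch, not the CRF inference used in this work: the function name `update_belief` and the per-frame likelihood values are hypothetical, and data association between frames is assumed to be already solved for the region in question.

```python
def update_belief(prior, likelihood):
    """One recursive Bayes step over a discrete set of semantic labels."""
    unnormalized = {label: prior[label] * likelihood[label] for label in prior}
    total = sum(unnormalized.values())
    if total == 0.0:
        # The observation rules out every label; fall back to the prior.
        return dict(prior)
    return {label: value / total for label, value in unnormalized.items()}


LABELS = ["ground", "structure", "furniture", "props"]

# Start from a uniform belief for one scene region.
belief = {label: 1.0 / len(LABELS) for label in LABELS}

# Hypothetical per-frame likelihoods p(observation | label) for that region.
observations = [
    {"ground": 0.1, "structure": 0.2, "furniture": 0.5, "props": 0.2},
    {"ground": 0.1, "structure": 0.1, "furniture": 0.6, "props": 0.2},
]

# Fold in one frame at a time; only the current belief has to be stored.
for likelihood in observations:
    belief = update_belief(belief, likelihood)

best_label = max(belief, key=belief.get)
```

Because each step only needs the previous belief and the new evidence, a region that is not observed in a frame simply keeps its belief unchanged, which is one simple way to account for missing data.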

The videos describing our approach are here

A very preliminary MATLAB implementation can be downloaded here

Robust Place Recognition Over Time

The ability to detect failures and reconsider information over time is crucial for long-term, robust autonomous robot applications. This applies to loop closure decisions in localization and mapping systems. We describe a method that analyzes all the information available to date in order to robustly remove past incorrect loop closures from the optimization process. We propose a novel consistency-based method to extract the loop closure regions that agree both among themselves and with the robot trajectory over time. The method, called RRR, realizes and removes the incorrect information, thus recovering the correct estimation. The RRR algorithm can efficiently resolve the inconsistencies in both batch and incremental modes. Moreover, it is able to handle multi-session experiments, whether spatially related or unrelated. We demonstrate the RRR algorithm on well-known odometry systems (e.g. visual or laser), using the very efficient graph optimization framework g2o as back-end.

The RRR algorithm is available at https://github.com/ylatif/rrr
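To give a feel for what "agreeing with the robot trajectory" can mean, here is a toy chi-squared gate for a robot moving along a line. It is not the RRR algorithm, which reasons over regions of loop closures and relies on graph optimization. The 1D setup, the function names, and all numeric values are assumptions made for illustration only.

```python
# 95% quantile of the chi-squared distribution with 1 degree of freedom.
CHI2_95_1DOF = 3.841


def loop_closure_chi2(odometry, odom_var, i, j, measured, meas_var):
    """Squared normalized residual of a loop closure between poses i < j.

    odometry[k] is the 1D displacement from pose k to pose k + 1, each with
    variance odom_var. The loop closure claims that pose j lies `measured`
    metres ahead of pose i, with variance meas_var.
    """
    predicted = sum(odometry[i:j])
    # Odometry errors are assumed independent, so their variances add up.
    variance = (j - i) * odom_var + meas_var
    residual = measured - predicted
    return residual * residual / variance


def consistent_closures(odometry, odom_var, closures):
    """Keep only the loop closures that pass the chi-squared gate."""
    kept = []
    for i, j, measured, meas_var in closures:
        chi2 = loop_closure_chi2(odometry, odom_var, i, j, measured, meas_var)
        if chi2 < CHI2_95_1DOF:
            kept.append((i, j, measured, meas_var))
    return kept


# The robot moves three steps forward and three steps back to the start.
odometry = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]
closures = [
    (0, 6, 0.1, 0.01),  # close to the odometry prediction of 0.0
    (0, 6, 2.5, 0.01),  # far from the prediction, e.g. a false place match
]
accepted = consistent_closures(odometry, 0.01, closures)
```

A gate like this only tests each constraint in isolation. The point of a consistency-based method is that groups of loop closures must also agree among themselves, which a per-constraint test cannot capture.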


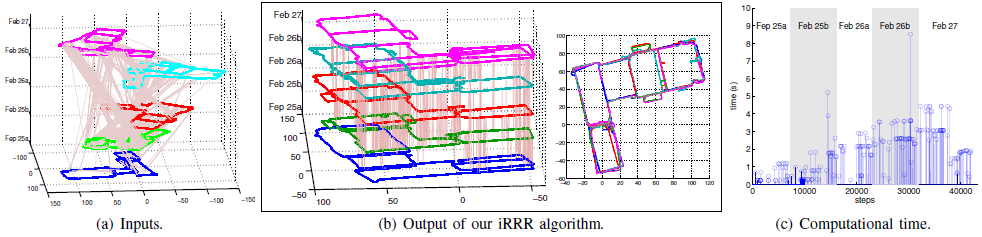

| (a) The input: odometry (each session is shown in a different color and at a different height) and loop closures (pink). (b) The output of our proposal, with each session at a different height (left) and the floor view (right). Bicocca multi-session experiment from the RAWSEEDS project, with odometry from laser scan matching and loop closures from a BoW+gc place recognition system. Each session is in its own frame of reference. (c) The computation time of our proposed method against the step at which it is triggered. |

Place Recognition with Conditional Random Fields

We present a place recognition algorithm for SLAM systems using stereo cameras that considers both appearance and geometric information. Both near and far scene points provide information for the recognition process. Hypotheses about loop closings are generated using a fast appearance technique based on the bag-of-words (BoW) method. Loop closing candidates are evaluated in the context of recent images in the sequence. In cases where similarity is not sufficiently clear, loop closing verification is carried out using a method based on Conditional Random Fields (CRFs). We compare our system with the state of the art using visual indoor and outdoor data from the RAWSEEDS project, and a multisession outdoor dataset obtained at the MIT campus. Our system achieves higher recall (fewer false negatives) at full precision (no false positives) than the state of the art. It is also more robust to changes in the appearance of places caused by changes in illumination (different shadow configurations on different days or at different times of day).
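The evaluation criterion used above, recall at full precision, can be made concrete with a short sketch. The helper names and the candidate scores below are hypothetical and are not taken from the experiments. The sketch only shows how the two metrics are computed from loop closure counts, and how the best recall with zero false positives follows from a list of scored candidates.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Precision and recall from loop closure detection counts."""
    detected = true_positives + false_positives
    actual = true_positives + false_negatives
    # By convention, an empty denominator gives a perfect score.
    precision = true_positives / detected if detected else 1.0
    recall = true_positives / actual if actual else 1.0
    return precision, recall


def recall_at_full_precision(candidates, total_true_loops):
    """Highest recall reachable with zero false positives.

    candidates is a list of (score, is_true_loop) pairs. Accepting only the
    candidates that score strictly above the best-scoring false loop
    guarantees that no false positive is accepted.
    """
    false_scores = [score for score, is_true in candidates if not is_true]
    threshold = max(false_scores) if false_scores else float("-inf")
    accepted = sum(1 for score, is_true in candidates if is_true and score > threshold)
    return accepted / total_true_loops if total_true_loops else 1.0


# Hypothetical scored candidates; four true loops exist, one was never proposed.
candidates = [(0.95, True), (0.90, True), (0.80, False), (0.75, True), (0.40, False)]

precision, recall = precision_recall(true_positives=2, false_positives=0, false_negatives=2)
best_recall = recall_at_full_precision(candidates, total_true_loops=4)
```

Full precision is the operating point of interest because a single false loop closure can corrupt the map, whereas a missed loop only forgoes a correction.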


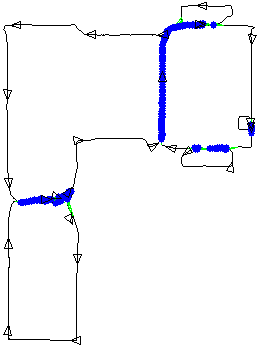

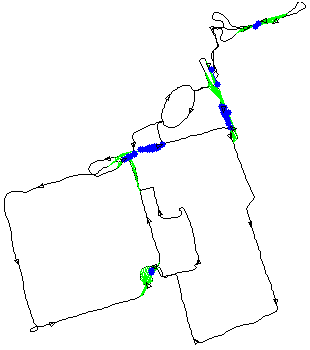

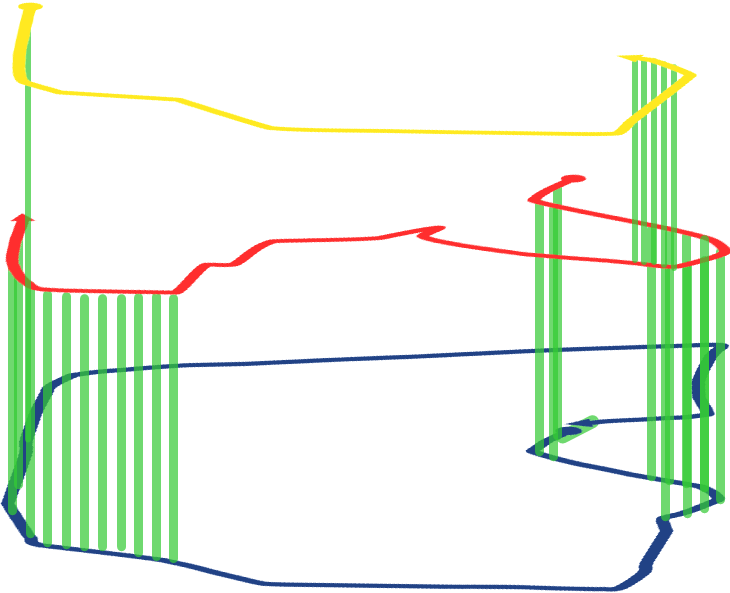

| Here, our place recognition system on an indoor dataset in the Bicocca campus (mpg file). Black lines and triangles denote the trajectory of the robot; light green lines denote actual loops; deep blue lines denote true loops detected. The precision on this dataset was 100% and the recall was 58.21%. | Here, our place recognition system on an outdoor dataset in the Bovisa campus (mpg file). Black lines and triangles denote the trajectory of the robot; light green lines denote actual loops; deep blue lines denote true loops detected. The precision on this dataset was 100% and the recall was 11.15%. | Here, our place recognition system on a multisession dataset in the MIT campus (mpg file). The precision on this dataset was 100% and the recall was 38.27%. |

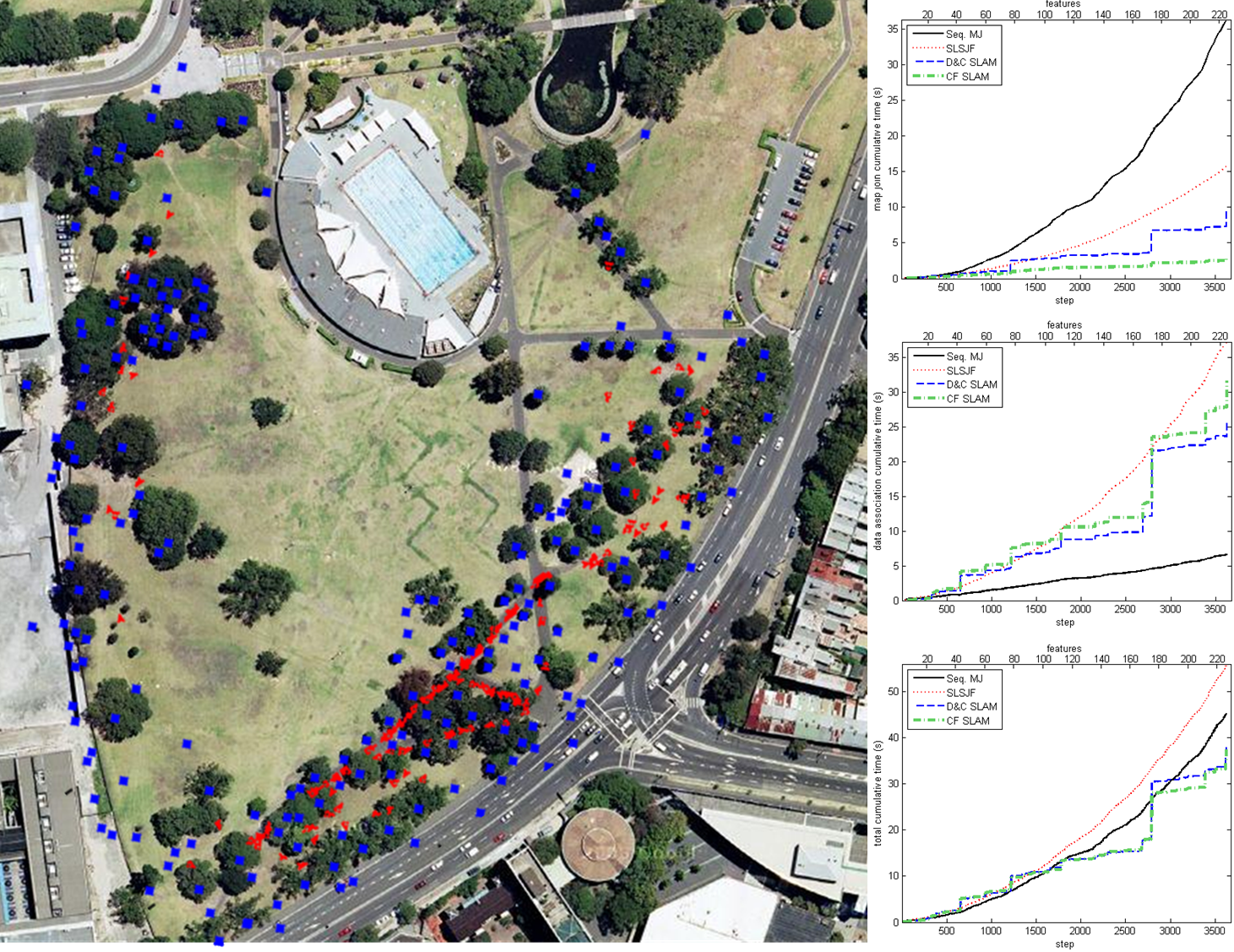

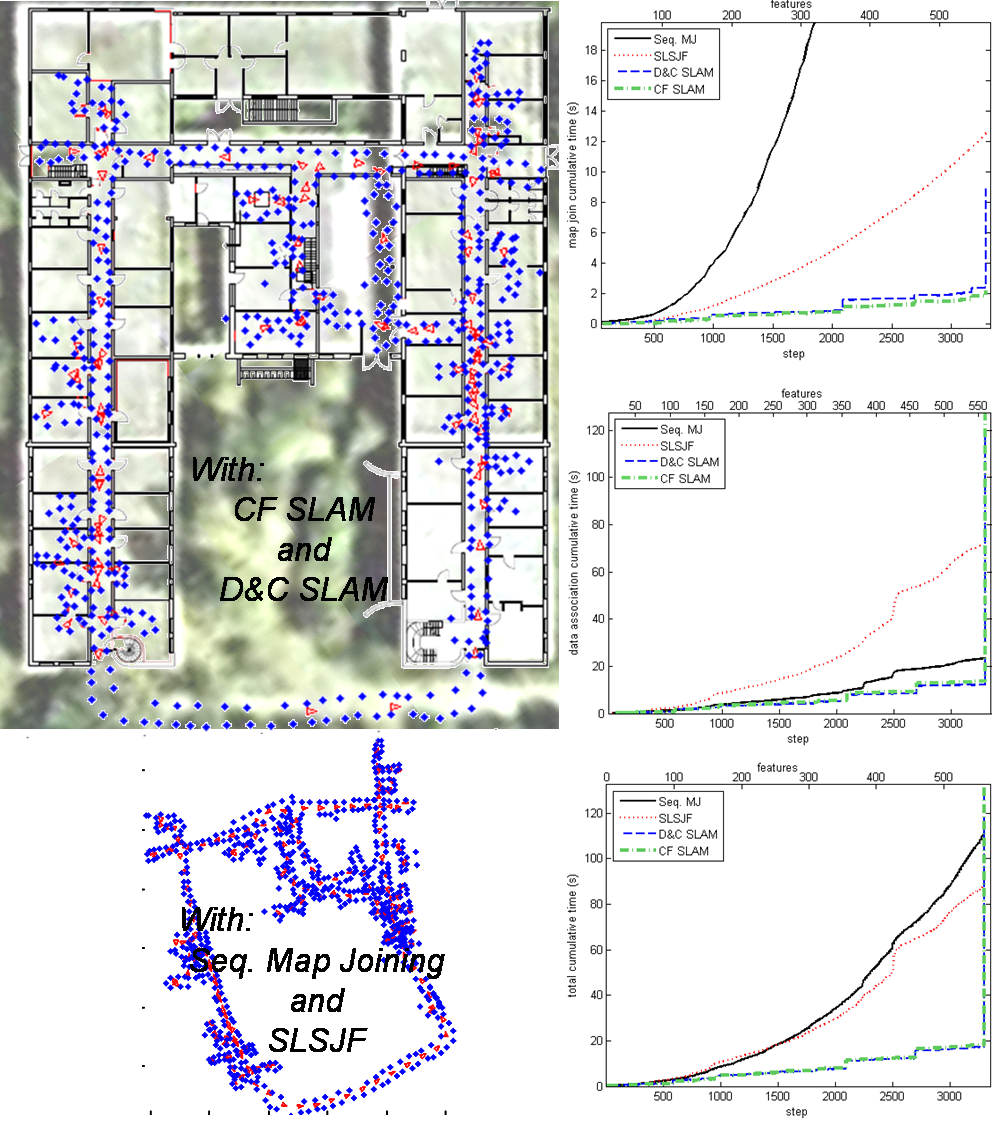

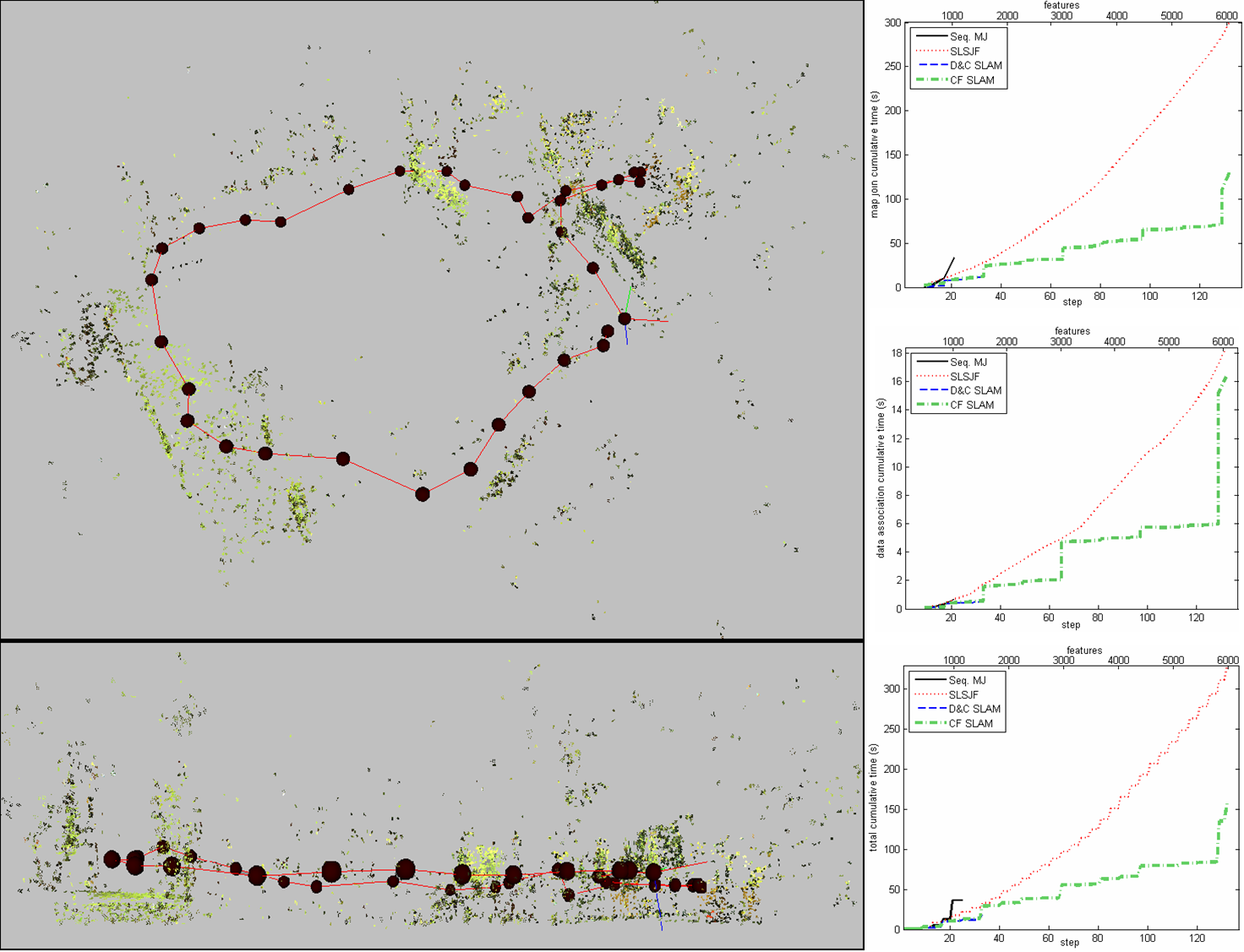

The Combined Kalman-Information Filter as an Efficient Large Scale SLAM Algorithm

The Combined Filter (CF), a judicious combination of Extended Kalman (EKF) and Extended Information filters (EIF), can be used to solve the Simultaneous Localization And Mapping (SLAM) problem highly efficiently in large environments. There are no restrictions on the topology of the environment or the trajectory. With the CF, filter updates can be executed in as little as O(log n), compared with other EKF and EIF based algorithms: O(n^2) for Map Joining SLAM, O(n) for Divide and Conquer (D&C) SLAM, and O(n^1.5) for the Sparse Local Submap Joining Filter (SLSJF). We can provide data association based on stochastic geometry that makes the CF SLAM algorithm as efficient as the most efficient algorithm that computes covariance matrices, with far lower memory requirements. If appearance information is available for data association, then CF can clearly outperform all other algorithms based on EKFs and EIFs.
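To see what these complexity orders imply in practice, the following sketch compares how each update cost grows when the map size n increases. It uses only the asymptotic orders quoted above. Constant factors are ignored, so the numbers are growth ratios rather than predicted run times, and the dictionary and function names are illustrative.

```python
import math

# Asymptotic update cost per algorithm, constants ignored.
# The base of the logarithm does not matter in O-notation; base 2 is used here.
UPDATE_COST = {
    "CF SLAM (best case)": lambda n: math.log2(n),
    "D&C SLAM": lambda n: float(n),
    "SLSJF": lambda n: float(n) ** 1.5,
    "Map Joining SLAM": lambda n: float(n) ** 2,
}


def growth_factor(name, n_small, n_large):
    """How many times more expensive an update gets when n grows."""
    cost = UPDATE_COST[name]
    return cost(n_large) / cost(n_small)


# Growing the map from 2^10 to 2^20 features.
factors = {name: growth_factor(name, 2 ** 10, 2 ** 20) for name in UPDATE_COST}

for name, factor in factors.items():
    print(f"{name:22s} update cost grows by a factor of {factor:,.0f}")
```

For a map that grows by a factor of 1024, a logarithmic update cost only doubles, while a quadratic one grows about a million times.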


| On the left, CF SLAM execution on the Victoria Park data set (avi file), and on the right, cumulative execution times for some SLAM algorithms. | On the left, execution of SLAM algorithms on the DLR-Spatial-Cognition data set (avi file), and on the right, cumulative execution times. | On the left, CF SLAM execution on the Visual Stereo data (avi file), and on the right, cumulative execution times for some SLAM algorithms. |

Research Projects

| NCCR Robotics: The National Centre of Competence in Research (NCCR) Robotics is a Swiss nationwide organisation funded by the Swiss National Science Foundation, bringing together top researchers from all over the country with the objective of developing new, human-oriented robotic technology for improving our quality of life. The Centre was opened on 1st December 2010, and binds together experts from five world-class research institutions: École Polytechnique Fédérale de Lausanne (EPFL) (leading house), Eidgenössische Technische Hochschule Zürich (ETH Zurich) (co-leading house), Universität Zürich (UZH), Istituto Dalle Molle di Studi sull’Intelligenza Artificiale (IDSIA) Lugano and University of Bern (UNIBE), for a period of up to 12 years. |  |

| UP-Drive: Automated Urban Parking and Driving. This project has received funding from the European Union Horizon 2020 research and innovation programme under grant agreement 688652, addressing Objective ICT-24-2015: ICT 2015 – Information and Communications Technologies: Robotics. The UP-Drive project runs from January 1, 2016 through December 31, 2019. |  |

| TRADR: Long-Term Human-Robot Teaming for Robot Assisted Disaster Response. European research project funded by the EU FP7 Programme, ICT: Cognitive systems, interaction, robotics (Project Nr. 609763) in the area of robot-assisted disaster response (disaster robotics). |  |

| Active SLAM by Cooperative Sensors in Large Scale Environments (funded by MEC, DPI2009-13710, 2010-2012) | |

| RAWSEEDS: Robotics Advancement through Web-publishing of Sensorial and Elaborated Extensive Data Sets (SSA-045144) (financed by the European Union 6th Framework Program, 2006-2009). |  |

| SLAM6DOF: Portable Simultaneous Localization and Mapping Systems for Large and Complex Environments (funded by MEC, DPI2006-13578, 2007-2009) |