Chapter 5 Comparing treatment groups by linear contrasts

We stay with our drug example, with four drugs \(D1\), \(D2\), \(D3\), \(D4\) randomly assigned to \(n=8\) mice each. Having found strong evidence that the average enzyme levels differ between the four drugs, we now develop methods to further investigate how they differ. In addition to the four drugs, we also want to study their two drug classes, where \(D1\) and \(D2\) share a main component (we call it ‘Class A’), and \(D3\) and \(D4\) share another main component (‘Class B’). We start by studying a general framework for estimating and testing multiple comparisons of arbitrary sets of treatment groups in Section 5.1. Testing multiple hypotheses simultaneously leads to a multiplicity problem that requires adjusting confidence and significance levels and we discuss several methods in Section 5.2. We also look into a further example that highlights some of the intricacies encountered in real-life experiments (Section 5.3.

5.1 Linear contrasts

The omnibus \(F\)-test appraises the evidence against the hypothesis of identical group means, but a rejection of this omnibus null hypothesis provides little information about which groups differ and how. A very general and elegant method for evaluating treatment group differences are linear contrasts. If a set of contrasts is orthogonal, then we can reconstitute the result of the \(F\)-test using the results from the contrasts, and a significant \(F\)-test implies that at least one contrast is significant. If the \(F\)-test is not significant, we might still find significant contrasts, because the \(F\)-test considers all deviations from equal group means simultaneously, while a contrast looks for a specific set of deviations and therefore provides more power while ignoring all other potential deviations.

For our example, we might be interested in comparing the two drugs \(D1\) and \(D2\), for example. One way of doing this is by a simple \(t\)-test between the corresponding observations. This yields a \(t\)-value of \(t=\) 2.22 and a \(p\)-value of \(p=\) 0.044 for a difference of \(\hat{\mu}_1-\hat{\mu}_2=\) 1.52 with a 95%-confidence interval of [0.05, 2.98]. While this approach yields a valid estimate and test, it is inefficient because we completely neglect the information available in the observations of drugs \(D3\) and \(D4\). Specifically, if we assume that the variances are the same in all treatment groups, we could use these additional observations to get a better estimate of the residual variance \(\sigma^2\) and increase the degrees of freedom.

We illustrate the use and advantages of linear contrasts using three example comparisons

- as before, compare the drugs in the first class:

D1versusD2; - compare the drugs in the second class:

D3versusD4; - compare the two classes: average of

D1andD2versus average ofD3andD4,

which we translate into the following contrasts between group means: \[\begin{align*} \text{D1 vs D2} &: \mu_1-\mu_2 \\ \text{D3 vs D4} &: \mu_3-\mu_4 \\ \text{Class A vs Class B} &: \left(\frac{\mu_1+\mu_2}{2}\right)-\left(\frac{\mu_3+\mu_4}{2}\right)\;. \end{align*}\] The first two contrasts could easily be estimated and tested using the two-group \(t\)-test framework. For the third contrast, this method would require manual calculation of the corresponding estimates and their standard errors.

Estimating linear contrasts is a large part in the analysis of experimental data. A main task in designing an experiment is to ensure that contrasts of interest are defined beforehand and the experimental design allows their estimation with adequate precision. In other words,

Contrasts of interest justify the design, not the other way around.

5.1.1 Defining contrasts

Formally, a linear contrast \(\Psi(\mathbf{w})\) for a treatment factor with \(k\) levels is a linear combination of the group means using a weight vector \(\mathbf{w}=(w_1,\dots,w_k)\): \[\begin{equation} \Psi(\mathbf{w}) = \mu_1\cdot w_1+\cdots +\mu_k\cdot w_k \tag{5.1}\;, \end{equation}\] where the entries in the weight vector sum to zero, such that \(w_1+\cdots +w_k=0\).

We compare the group means of two sets \(A\) and \(B\) of treatment factor levels by selecting the weights \(w_i\) as follows:

- the weight of each treatment level not considered is zero: \(w_i=0\iff i\not\in A\) and \(i\not\in B\);

- the weights for set \(A\) are all positive: \(w_i>0\iff i\in A\);

- the weights for set \(B\) are all negative: \(w_i<0\iff i\in B\);

- the weights sum to zero: \(w_1+\cdots +w_k=0\);

- the individual weights \(w_i\) determine how the group means of the sets \(A\) and \(B\) are averaged; using equal weights for each set corresponds to a simple average of the set’s group means.

The corresponding weight vectors for our example contrasts are \(\mathbf{w}_1=(+1,-1,0,0)\) for the first contrast, where \(A=\{1\}\) and \(B=\{2\}\); \(\mathbf{w}_2=(0,0,+1,-1)\) for the second, \(A=\{3\}\) and \(B=\{4\}\); and \(\mathbf{w}_3=(+1/2, +1/2, -1/2, -1/2)\) for the third contrast, where \(A=\{1,2\}\) and \(B=\{3,4\}\).

5.1.2 Estimating contrasts

We estimate a contrast by replacing the group means in (5.1) by their estimates: \[ \hat{\Psi}(\mathbf{w}) = \hat{\mu}_1\cdot w_1+\cdots +\hat{\mu}_k\cdot w_k\;. \] The estimate can alternatively be written in terms of the model parameter estimates \(\hat{\mu}\) and \(\hat{\alpha}_i\).

The estimation, testing, and interpretation of linear contrasts do not suffer from unbalancedness, and we can make our exposition more general by allowing different numbers of samples per group, denoting by \(n_i\) the number of samples in group \(i\). From the properties of the group mean estimates \(\hat{\mu}_i\), we know that \(\hat{\Psi}(\mathbf{w})\) is an unbiased estimator of the contrast \(\Psi(\mathbf{w})\) and has variance \[ \text{Var}\left(\hat{\Psi}(\mathbf{w})\right) = \text{Var}\left(\hat{\mu}_1\cdot w_1+\cdots \hat{\mu}_k\cdot w_k\right) = \sum_{i=1}^k w_i^2 \cdot\text{Var}(\hat{\mu}_i) = \sigma_e^2\cdot\sum_{i=1}^k \frac{w_i^2}{n_i}\;. \] We can thus estimate its standard error by \[ \widehat{\text{se}}(\hat{\Psi}(\mathbf{w})) = \hat{\sigma}_e\cdot\sqrt{\sum_{i=1}^k\frac{w_i^2}{n_i}}\;. \]

For the estimate of our third example contrast, we calculate a standard error of \[ \widehat{\text{se}}(\hat{\Psi}(\mathbf{w}_3)) = \hat{\sigma}_e\cdot\sqrt{\left(\frac{(0.5)^2}{8}+\frac{(0.5)^2}{8}+\frac{(-0.5)^2}{8}+\frac{(-0.5)^2}{8}\right)}=\sqrt{\frac{1}{8}}\cdot\hat{\sigma}_e\approx 0.35\cdot\hat{\sigma}_e\;. \]

We already have an estimate \(\hat{\sigma}_e=\) 1.22 of the residual standard deviation: it is the root of the residual mean squares. This yields a contrast estimate of \(\hat{\Psi}(\mathbf{w}_3)=\) 4.28, which is the difference between the average enzyme levels for a class A and class B drug, and a standard error of \(\widehat{\text{se}}(\hat{\Psi}(\mathbf{w}_3))=\,\) 0.43.

The estimate of a contrast is based on the normally distributed estimates of the group means. Since we also estimate the residual variance, the resulting estimator for any contrast has a \(t\)-distribution with \(N-k\) degrees of freedom. This immediately yields a \((1-\alpha)\)-confidence interval for a contrast estimate \(\hat{\Psi}(\mathbf{w})\): \[ \hat{\Psi}(\mathbf{w}) \pm t_{\alpha/2, N-k}\cdot \widehat{\text{se}}\left(\hat{\Psi}(\mathbf{w})\right) = \hat{\Psi}(\mathbf{w}) \pm t_{\alpha/2, N-k}\cdot\hat{\sigma}_e\cdot\sqrt{\sum_{i=1}^k\frac{w_i^2}{n_i}}\;, \] where again \(t_{\alpha/2, N-k}\) is the \(\alpha/2\)-quantile of the \(t\)-distribution with \(N-k\) degrees of freedom (\(N\) the total number of samples). If the degrees of freedom are sufficiently large, we can alternatively calculate the confidence interval based on the normal quantiles by replacing the quantile \(t_{\alpha/2, N-k}\) with \(z_{\alpha/2}\).

For our third example contrast, we calculate a \(t\)-based 95%-confidence interval of [3.4, 5.16], based on the \(t\)-quantile \(t_{0.025,28}=\) -2.05 for \(N-k=\) 28 degrees of freedom. The confidence interval only contains positive values and we can therefore conclude that drugs in class A have indeed a higher enzyme level than those in class B and the observed difference between the classes is not likely due to random fluctuations in the data.

Equivalent calculations for the remaining two example contrasts \(\Psi(\mathbf{w}_1)\) and \(\Psi(\mathbf{w}_2)\) yield the estimates and 95%-confidence intervals of Table 5.1. The results suggest that the two drugs D1 and D2 in class A do lead to different enzyme levels, if only slightly so. Note that the estimate for this contrast is identical to our \(t\)-test result, but the confidence interval is substantially narrower; this is the result of pooling the data for estimating the residual variance and increasing the degrees of freedom. The two drugs in class B cannot be distinguished based on the data.

| Comparison | Contrast | Estimate | LCL | UCL |

|---|---|---|---|---|

D1-vs-D2 |

\(\Psi(\mathbf{w}_1)=\mu_1-\mu_2\) | 1.51 | 0.27 | 2.76 |

D3-vs-D4 |

\(\Psi(\mathbf{w}_2)=\mu_3-\mu_4\) | -0.1 | -1.34 | 1.15 |

Class A-vs-Class B |

\(\Psi(\mathbf{w}_3)=\frac{\mu_1+\mu_2}{2}-\frac{\mu_3+\mu_4}{2}\) | 4.28 | 3.4 | 5.16 |

5.1.3 Testing contrasts

A linear contrast estimate has a \(t\)-distribution for normally distributed response values. This allows us to derive a \(t\)-test for testing the null hypothesis \[ H_0: \Psi(\mathbf{w}) = 0 \] using the test statistic \[ T = \frac{\hat{\Psi}(\mathbf{w})}{\widehat{\text{se}}(\hat{\Psi}(\mathbf{w}))} = \frac{\sum_{i=1}^k w_i\hat{\mu}_i}{\hat{\sigma}_e\cdot \sqrt{\sum_{i=1}^kw_i^2/n_i}}\;, \] which has a \(t\)-distribution with \(N-k\) degrees of freedom if \(H_0\) is true.

For our second example contrast, the \(t\)-statistic is

\[

T = \frac{(0)\cdot \hat{\mu}_1 + (0)\cdot \hat{\mu}_2 + (+1)\cdot\hat{\mu}_3\,+\,(-1)\cdot\hat{\mu}_4}{\hat{\sigma}_e\cdot \sqrt{\frac{(0)^2+(0^2)+(+1)^2+(-1)^2}{8}}} = \frac{\hat{\mu}_3-\hat{\mu}_4}{\sqrt{2}\hat{\sigma_e}/\sqrt{8}}\;.

\]

This is exactly the statistic for a two-sample \(t\)-test, but uses a pooled variance estimate over all treatment groups and \(N-k=\) 28 degrees of freedom. For our data, we calculate \(t=\) -0.16 and \(p=\) 0.88; the enzyme levels for drugs D3 and D4 cannot be distinguished. Equivalent calculations for all three example contrasts are summarized in Table 5.2. Note again that for our first contrast, the \(t\)-value is abotu 10% larger than with the \(t\)-test based on the two groups alone, and the corresponding \(p\)-value is about one-half.

| Comparison | Test | \(t\) | se | \(p\)-value |

|---|---|---|---|---|

D1-vs-D2 |

\(H_0: \mu_1-\mu_2=0\) | 2.49 | 0.61 | 0.02 |

D3-vs-D4 |

\(H_0:\mu_3-\mu_4=0\) | -0.16 | 0.61 | 0.88 |

Class A-vs-Class B |

\(H_0:\frac{\mu_1+\mu_2}{2}-\frac{\mu_3+\mu_4}{2}=0\) | 9.96 | 0.43 | 0 |

The distribution of a contrast estimate is based on \(N-k\) degrees of freedom, because we use the available information from all groups for estimating the residual standard deviation \(\sigma_e^2\), regardless of the groups that actually appear in the contrast. Estimating and testing a contrast between two group means is therefore more precise and powerful than isolating the data for the two groups and calculating a \(t\)-test based on this data subset. For example, with \(k=4\) treatment groups and \(n=4\) samples per group, a contrast between two treatment levels is based on \(4\cdot 4-4=12\) degrees of freedom, and the corresponding 2.5% and 97.5% \(t\)-quantiles are -2.18 and 2.18, respectively. Ignoring everything but the data in the two groups, a two-sample \(t\)-test has \(2\cdot 4-2=6\) degrees of freedom, and the corresponding 2.5% and 97.5% \(t\)-quantiles are -2.45 and 2.45, respectively, about 12% larger. This widens the confidence intervals and decreases the rejection region.

5.1.4 Using contrasts in R

The emmeans package again provides for the heavy lifting: we calculate the analysis of variance using aov(), estimate the group means using emmeans(), and define a list of contrasts which we estimate using contrast().

For our three example contrasts, the following code performs all calculations in just four commands:

# Calculate the analysis of variance

m = aov(y~drug, data=d)

# Estimate group means of treatment factor

em = emmeans(m, ~drug)

# Define a list of contrasts

# use R's nice features for

# providing readable names

ourContrasts = list(

"D1-vs-D2"=c(1,-1,0,0),

"D3-vs-D4"=c(0,0,1,-1),

"Class A-vs-Class B"=c(1/2,1/2,-1/2,-1/2)

)

estimatedContrasts = contrast(em, method=ourContrasts)The method unsurprisingly yields identical test statistics, standard errors, and \(p\)-values as in our manual calculations. We are usually more interested in confidence intervals for contrast estimates than we are in \(t\)-values and test results. Conveniently, the confint() function takes a contrast() result directly, and confint(estimatedContrasts) yields 95%-confidence intervals for our contrasts by default.

5.1.5 Orthogonal contrasts and ANOVA decomposition

There is no limit on the number of contrasts that we might estimate for any set of data. On the other hand, contrasts are linear combinations of the \(k\) group means and we also need to estimate the grand mean.2 That means that we can find exactly \(k-1\) contrasts that exhaust the information available in the group means and any additional contrast can be calculated from results of the \(k-1\) known contrasts. This idea is made formal by saying that two contrasts \(\Psi(\mathbf{w})\) and \(\Psi(\mathbf{v})\) are orthogonal if \[ \sum_{i=1}^k \frac{w_i\cdot v_i}{n_i} = 0\;. \] This requirement reduces to the more interpretable ‘usual’ orthogonality condition \(\sum_i w_i\cdot v_i = 0\) for a balanced design.

Our three example contrasts are all pairwise orthogonal; for example, we have \(\sum_i w_{1,i}\cdot w_{2,i}=(+1\cdot 0)+(-1\cdot 0)+(0\cdot +1)+(0\cdot -1)=0\) for the first and second contrast. With \(k=4\), only three contrasts can be mutually orthogonal and our three contrasts thus fully exhaust the available information.

If two contrasts \(\Psi(\mathbf{w})\) and \(\Psi(\mathbf{v})\) are orthogonal, then the associated null hypotheses \[ H_0: \Psi(\mathbf{w})=0 \quad \text{and} \quad H_0: \Psi(\mathbf{v})=0 \] are logically independent in the sense that we can learn nothing about one being true or false by knowing the other being true or false.3

Orthogonal contrasts provide a systematic way to ensure that the data of an experiment is fully exhausted in the analysis. In practice, scientific questions are sometimes more directly addressed by sets of non-orthogonal contrasts that also fully exhaust the data. They are often preferred for their easier interpretation and closer link to the scientific question, even though their hypothesis tests might be logically dependent and contain redundancies.

A set of \(k-1\) orthogonal contrasts decomposes the treatment sum of squares into \(k-1\) contrast sums of squares. The sum of squares of a contrast \(\Psi(\mathbf{w})\) are \[ \text{SS}_\mathbf{w} = \frac{\left(\sum_{i=1}^k w_i\hat{\mu}_i\right)^2}{\sum_{i=1}^kw_i^2/n_i}\;, \] and each contrast has one degree of freedom: \(\text{df}_\mathbf{w}=1\).

The contrast sums of squares add exactly to the treatment sum of squares, and we can use an \(F\)-test with 1 numerator and \(N-k\) denominator degrees of freedom for testing the null hypothesis \(H_0: \Psi(\mathbf{w})=0\); this test is equivalent to the corresponding \(t\)-test. The \(F\)-statistic is \[ F = \frac{\text{SS}_\mathbf{w}/1}{\hat{\sigma}_e^2} = \frac{\text{SS}_\mathbf{w}}{\text{MS}_\text{res}}\;. \] In particular, the result of the omnibus \(F\)-test can—in principle—be re-derived from testing \(k-1\) orthogonal contrasts and if the omnibus \(F\)-test is significant, so is at least one of the contrasts in an orthogonal set.

For our third example contrast, we find \(\text{SS}_{\mathbf{w}_3}=\) 0.04. The \(F\)-statistic is therefore \(F=\) 0.04 / 1.48 = 0.03 with \(1\) numerator and 28 denominator degrees of freedom, yielding the previous \(p\)-value of \(p=\) 0.88, and \(\sqrt{F}=\) 0.16 is precisely the previous value of the \(t\)-statistic.

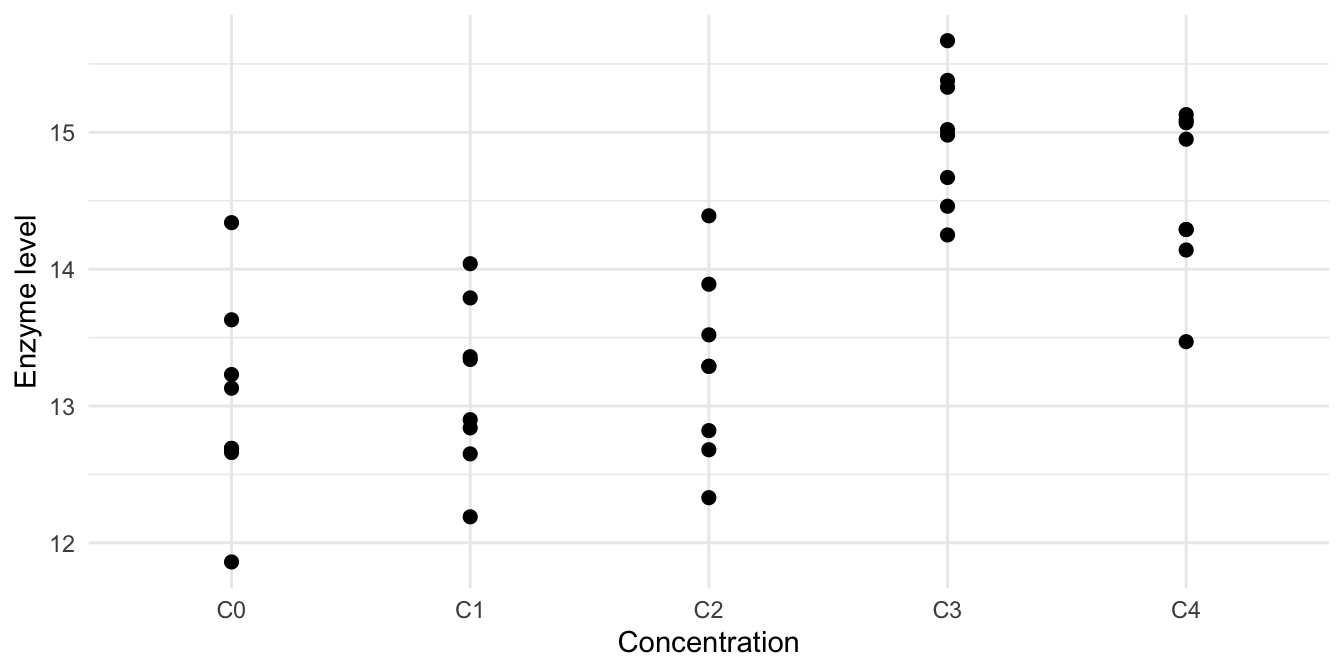

5.1.6 Contrasts for ordered factors

The order of levels of Drug is completely arbitrary: we could just as well put the drugs of Class B as the first two levels and those of Class A as level three and four. For some treatment factors, levels are ordered and level \(i\) is ‘smaller’ than level \(i+1\) in some well-defined sense; such factors are called ordinal. For example, our treatment might consist of our current drug D1 administered at equally spaced concentrations \(C_0<C_1<C_2<C_3<C_4\) (in some arbitrary units). The data for 8 mice per concentration are shown in Figure 5.1 and indicate that the drug has little effect for the first two or three concentrations, then increases substantially and seems to decrease again for higher concentrations.

Figure 5.1: Enzyme levels for five increasing concentrations of drug D1.

Trend analysis using orthogonal polynomials

We can analyze this data using ordinary contrasts, but with ordered treatment factor levels, it makes sense to look for trends. We do this using orthogonal polynomials, which are linear, quadratic, cubic, and quartic polynomials that decompose a potential trends into different components. Orthogonal polynomials are formulated as a special set of orthogonal contrasts. The contrast weight vectors for our example are shown in Table 5.3 (left). Each polynomial contrast measures the similarity of the shape of the data to the pattern described by the weight vector. The linear polynomial measures a upward or downward trend, while the quadratic polynomial measures curvature in the response to the concentrations, such that the trend is not simply a proportional increase or decrease in enzyme level. Cubic and quartic polynomials measure more complex curvature, but become harder to interpret directly. We use the emmeans() and contrast() combination again, and ask for a poly contrast to generate the appropriate set of orthogonal contrasts:

m.conc = aov(y~concentration, data=d.5levels)

em.conc = emmeans(m.conc, ~concentration)

ct.conc = contrast(em.conc, method="poly")

|

|

For our data, we get the result in Table 5.3 (right). We find a highly significant positive linear trend, which means that on average, the enzyme level increases with increasing concentration. The negligible quadratic together with significant cubic and quartic trend components means that there is curvature in the data, but it is changing with the concentration. This reflects the fact that the data show a large increase for the fourth concentration, which then levels off or decreases at the fifth concentration, leading to a sigmoidal pattern.

Time trends

Another typical example for an ordered treatment factor is time. We have to be careful about the experimental design, however. For example, measuring the enzyme levels at four timepoints using different mice per timepoint can be analysed using orthogonal polynomials and an analysis of variance approach. This is because each mouse can be randomly allocated to one timepoint, yielding a completely randomized design. If, however, the design is such that the same mice are measured at four different timepoints, then the assumption of random assignment is violated and analysis of variance with contrasts is no longer a viable analysis option.4 This type of longitudinal design, where the same unit is followed over time, requires more specialized methods to capture the inherent correlation between timepoints.

Minimal effective dose

Another example of a contrast useful for ordered factors are the orthogonal Helmert contrasts. They compare the second level to the first, the third level to the average of the first and second level, the fourth level to the average of the preceding three, and so forth.

Helmert contrasts can be used for finding a minimal effective dose in a dose-response study. Since doses are typically in increasing order, we first test the second-lowest against the lowest does. If the corresponding average responses cannot be distinguished, we assume that no effect of relevant size is present (provided the experiment is not underpowered). We then treat the two doses as equal and use their common average to compare against the third-smallest dose, thereby increasing the precision.

Helmert contrasts are not directly available in emmeans, but the package manual tells us how to define them ourselves:

helmert.emmc = function(ordered.levels, ...) {

# Use built-in R contrast to find contrast matrix

contrast.matrix = as.data.frame(contr.helmert(ordered.levels))

# Provide useful name for each contrast

names(contrast.matrix) = paste(ordered.levels[-1],"vs lower")

# Provide name of contrast set

attr(contrast.matrix, "desc") = "Helmert contrasts"

return(contrast.matrix)

}In our example data in Figure 5.1, the concentration \(C_3\) shows a clear effect. The situation is much less clear-cut for lower concentrations \(C_0,\dots C_2\); there might be a hint of linear increase, but this might be due to random fluctuations. Using the Helmert contrasts

yields the results in Table 5.4. Concentrations \(C_0, C_1, C_2\) show no discernible differences, while enzyme levels increase significantly for concentration \(C_3\), indicating that the minimal effective dose is between concentrations \(C_2\) and \(C_3\).

|

|

5.1.7 Effect size measure

Similarly to our previous discussions for simple differences and omnibus \(F\)-tests, we might sometimes profit from a standardized effect size measure for a linear contrast \(\Psi(\mathbf{w})\), which provides the size of the contrast in units of standard deviation.

A first idea is to directly generalize Cohen’s \(d\) by \(\Psi(\mathbf{w})/\sigma_e\) and measure the contrast estimate in units of the standard deviation. A problem with this approach is that the measure still depends on the weight vector: if \(\mathbf{w}=(w_1,\dots,w_k)\) is the weight vector of a contrast, then we can define an equivalent contrast using, for example, \(\mathbf{w}'=2\cdot\mathbf{w}=(2\cdot w_1,\dots,2\cdot w_k)\). Then, \(\Psi(\mathbf{w}')=2\cdot\Psi(\mathbf{w})\), and the above standardized measure also scales accordingly. In addition, we would like our standardized effect measure to equal Cohen’s \(d\) if the contrast describes a simple difference between two groups.

Both problems are resolved by Abelson’s standardized effect size measure \(d_\mathbf{w}\) (Abelson and Prentice 1997): \[ d_\mathbf{w} = \sqrt{\frac{2}{\sum_{i=1}^k w_i^2}}\cdot \frac{\Psi(\mathbf{w})}{\sigma_e} \;\text{estimated by}\; \hat{d}_\mathbf{w}=\sqrt{\frac{2}{\sum_{i=1}^k w_i^2}}\cdot \frac{\hat{\Psi}(\mathbf{w})}{\hat{\sigma}_e}\;. \]

For our scaled contrast \(\mathbf{w}'\), we find \(d_\mathbf{w'}=d_\mathbf{w}\) and the standardized effect sizes for \(\mathbf{w}'\) and \(\mathbf{w}\) coincide. For a simple difference \(\mu_i-\mu_j\), we have \(w_i=+1\) and \(w_j=-1\), all other weights being zero, and thus \(\sum_{i=1}^k w_i^2=2\), reducing \(d_\mathbf{w}\) to Cohen’s \(d\).

5.1.8 Power analysis and sample size for linear contrasts

The power calculations for linear contrasts can be done based on the contrast estimate and its standard error, which requires calculating the power from the noncentral \(t\)-distribution. We follow the equivalent approach based on the contrast’s sum of squares and the residual variance, which requires calculating the power from the noncentral \(F\)-distribution.

Exact calculation

The noncentrality parameter \(\lambda\) of the \(F\)-distribution for a contrast is given as \[ \lambda = \frac{\Psi(\mathbf{w})^2}{\text{Var}(\hat{\Psi}(\mathbf{w}))} = \frac{\left(\sum_{i=1}^k w_i\cdot \mu_i\right)^2}{\frac{\sigma^2}{n}\sum_{i=1}^k w_i^2} = n\cdot 2\cdot \left(\frac{d_\mathbf{w}}{2}\right)^2\;. \] Note that the last term has exactly the same \(\lambda=n\cdot k\cdot f^2\) form that we encountered previously, since a contrast uses \(k=2\) (sets of) groups, and for direct group comparisons, we know that \(f^2=d^2/4\).

From the noncentrality parameter, we can calculate the power for testing the null hypothesis \(H_0: \Psi(\mathbf{w})=0\) for any given significance level \(\alpha\), residual variance \(\sigma_e^2\), sample size \(n\), and assumed real value of the contrast \(\Psi_0\), respectively the assumed standardized effect size \(d_{\mathbf{w},0}\). We calculate the power using our getPowerF() function and increase \(n\) until we reach the desired power.

For our first example contrast \(\mu_1-\mu_2\), we find \(\sum_i w_i^2=2\). For a minimal difference of \(\Psi_0=\) 2 and using our residual variance estimate \(\hat{\sigma}_e^2=\) 1.5, we calculate a noncentrality parameter of \(\lambda=n\cdot\) 1.33. The numerator degree of freedom is df1=1 and the denominator degrees of freedom are df2=n*4-4. For a significance level of \(\alpha=5\%\) and a desired power of \(1-\beta=80\%\), we find a required sample size of \(n=\) 7. We arrive at a very conservative estimate by replacing the residual variance by the upper confidence limit \(\text{UCL}=\) 2.74 of its estimate. This increases the required sample size to \(n=\) 12. The sample size increases substantially to \(n=\) 95 if we want to detect a much smaller contrast value of \(\Psi_0=0.5\).

Similarly, our third example contrast \((\mu_1+\mu_2)/2 - (\mu_3+\mu_4)/2\) has \(\sum_i w_i^2=1\). A minimal value of \(\Psi_0=2\) can be detected with 80% power at a 5% significance level for a sample size of \(n=\) 4 per group, with an exact power of 0.85 (for \(n=\) 3 the power is 0.7). Even though the desired minimal value is identical to the first example contrast, we need less samples since we are comparing the average of two groups to the average of two other groups, making the estimate of the difference more precise.

Portable power

We apply the same ideas as for the omnibus \(F\)-test for making the power analysis for linear contrasts portable. The numerator degrees of freedom for a contrast \(F\)-test is \(\text{df}_\text{num}=1\). Thus, \(\phi^2=\lambda/2\) and we find a sample size formula of \[ n = \frac{2\cdot\phi^2\cdot\sigma_e^2\cdot\sum_{i=1}^k w_i^2}{\Psi_0^2}\;. \] For a simple difference contrast, \(\sum_i w_i^2=2\), we have \(\Psi_0=\delta_0\). With the approximation \(\phi^2\approx 4\) for a power of 80% at a 5% significance level and \(k=2\) factor levels, we derive our old formula again: \(n=16/(\Psi_0/\sigma_e)^2\).

For our first example contrast with \(\Psi_0=2\), we find an approximate sample size of \(n\approx\) 11 in reasonable agreement with the exact sample size of \(n=\) 7. If we instead ask for a minimal difference of \(\Psi_0=0.5\), this number increases to \(n\approx\) 175 mice per drug treatment group (exact: \(n=\) 95).

The sample size is lower for a contrast between averages of two group means. For our third example contrast with weights \(\mathbf{w}=(1/2, 1/2, -1/2, -1/2)\) we find \(n=8/(\Psi_0/\sigma_e)^2\). With \(\Psi_0=2\), this gives an approximate sample size of \(n\approx\) 5 (exact: 4).

5.2 Multiple comparions and post-hoc analyses

5.2.1 Introduction

Planned and post-hoc contrasts

The use of orthogonal contrasts—pre-defined before the experiment is conducted—provides no further difficulty in the analysis. They are, however, often not sufficient for an informative analysis: we may want to use more than \(k-1\) planned contrasts as part of our pre-defined analysis strategy. In addition, exploratory experiments lead to post-hoc contrasts that are based on results observed after the experiment is conducted. Post-hoc contrasts also play a role in carefully planned and executed confirmatory experiments, when peculiar patterns emerge that were not anticipated, but require further investigation.

We must carefully distinguish between planned and post-hoc contrasts: the first case just points to the limitations of independent pre-defined contrasts, but the contrasts and hypotheses are still pre-defined at the planning stage of the experiment and before the data is in. In the second case, we ‘cherry-pick’ interesting results from the data, after the experiment is done and we inspected the results. This course of action increases our risk of finding false positives. A common example of a post-hoc contrasts occurs when looking at all pair-wise comparisons of treatments and defining the difference between the largest and smallest group mean as the only contrast of interest. Since there is always one pair with greatest difference, it is incorrect to use a standard \(t\)-test for this contrast. Rather, the resulting \(p\)-value needs to be adjusted for the fact that we cherry-picked our contrast, making it larger on average than the contrast of any randomly chosen pair. Properly adjusted tests for post-hoc contrasts have lower power than those of pre-defined planned contrasts, because adjusting for the selection of “interesting” patterns inflates the quantiles of the null distribution and pushes the rejection region outwards.

The problem of multiple comparisons

Whether we are testing several pre-planned contrasts or post-hoc contrasts of some kind, we also have to adjust our analysis for multiple comparisons.

Imagine a scenario where we are testing \(q\) hypotheses, from \(q\) contrasts, say. We want to control the false positive probability using a significance level of \(\alpha\) for each individual test. The probability that any individual test falsely rejects the null hypothesis of a zero difference is then \(\alpha\). Now imagine that all \(q\) null hypotheses are true. Even then, the probability that at least one of the tests will incorrectly reject its null hypothesis is not \(\alpha\), but rather \[ 1-(1-\alpha)^q\;. \] For \(q=200\) contrasts and a significance level of \(\alpha=5\%\), for example, the probability of at least one incorrect rejection is 99.9965%, a near certainty5. Indeed, the expected number of false positives, given that all 200 null hypotheses are true, is \(200\cdot \alpha=10\). Even if we only test \(q=5\) hypotheses, the probability of an incorrect rejection is 22.6% and thus still substantially larger than the desired false positive probability.

The probability of at least one false positive in a family of tests (the family-wise or experiment-wise error probability) increases with the number of tests, and in any case exceeds the individual test’s significance level \(\alpha\). This is known as the multiple testing problem. In essence, we have to decide which error we want to bound: is it the individual error per hypothesis or is it the overall error of at least one incorrect declaration of significance in the whole family of hypotheses? An insightful discussion of the problem of multiple comparisons and error control can be found in (Tukey 1991).

A multiple comparison procedure (MCP) is an algorithm that computes adjusted significance levels for each individual test, such that the overall error probability is bound by a pre-defined probability threshold. There are several types of error probability6 and each usually comes with different MCPs. We focus on several procedures for controlling the family-wise error probability, the most relevant for our purposes. Some of the procedures are universally applicable, while others a predicated on specific classes of hypotheses (such as comparing all pairs), but offer more power than more general procedures. Specifically, (i) the universal Bonferroni-Holm correction provides control for all scenarios of pre-defined hypotheses; (ii) Tukey’s honest significant difference (HSD) provides control for testing all pairs of groups; (iii) Dunnett’s method covers the case of comparing each group to a common control group; and (iv) Scheffé’s method gives confidence intervals and tests for any post-hoc comparisons suggested by the data.

These methods apply generally for multiple hypotheses, but we focus on testing \(q\) contrasts \(\Psi(\mathbf{w}_l)\) with \(q\) null hypotheses \[ H_{0,l}: \Psi(\mathbf{w}_l)=0 \] where \(\mathbf{w}_l=(w_{1l},\dots,w_{kl})\) is the weight vector describing the \(l\)th contrast, and \(l=1\dots q\). For example, for \(k\) groups we might have the contrasts for all \(k\cdot(k-1)/2\) pair-wise group comparisons \[ \Psi(\mathbf{w}_1) = \mu_1-\mu_2\,,\quad\cdots\,,\quad\; \Psi(\mathbf{w}_{k(k-1)/2}) = \mu_{k-1}-\mu_k\;. \]

Adjustment in R

There are several options to use these methods in R, some in base-R and others spread over various packages. We again use the emmeans package, and follow the same strategy as before: estimate the model parameters using aov() and estimate the group means using emmeans(). We then use the contrast() function with an adjust= argument to choose a multiple correction procedure \(p\)-values and confidence intervals of contrasts.

5.2.2 Bonferroni-Holm correction for general purpose

The Bonferrroni correction is a popular and simple method for controlling the family-wise error probability by simply adjusting each test’s individual significance level from \(\alpha\) to \(\alpha/q\). It is very conservative: it will lead to quite small significance levels for the individual tests, often much smaller than necessary.

The simple Bonferroni method is a single-step procedure to control the family-wise error probability by adjusting the individual significance level from \(\alpha\) to \(\alpha/q\). It does not consider the observed data and rejects the null hypothesis \(H_0: \Psi(\mathbf{w}_l)=0\) if the contrast exceeds the critical value based on the adjusted \(t\)-quantile: \[ \left|\hat{\Psi}(\mathbf{w}_l)\right| > t_{1-\alpha/2q, N-k}\cdot\hat{\sigma}\cdot \sqrt{\sum_{i=1}^k\frac{w_{il}^2}{n_i}}\;. \] It is easily applied to existing test results: just multiply the original \(p\)-values by the number of tests \(q\) and declare a test significant if this adjusted \(p\)-value stays below the original significance level \(\alpha\).

For our three example contrasts, we previously found unadjusted \(p\)-values of \(0.019\) for the first, \(0.875\) for the second, and \(10^{-10}\) for the third contrast. The Bonferroni adjustment consists of multiplying each by \(q=3\), resulting in adjusted \(p\)-values of \(0.057\) for the first, \(2.626\) for the second (which we cap at \(1.0\)), and \(3\times 10^{-10}\) for the third contrast, moving the first contrast from significant to not significant at the \(\alpha=10\%\) level. We achieve the same result (Table 5.5) by using the adjust= option, and we may alternatively use confint() on the results for an estimation rather than testing result table:

| contrast | estimate | SE | df | t.ratio | p.value |

|---|---|---|---|---|---|

| D1-vs-D2 | 1.51 | 0.61 | 28 | 2.49 | 0.06 |

| D3-vs-D4 | -0.10 | 0.61 | 28 | -0.16 | 1.00 |

| Class A-vs-Class B | 4.28 | 0.43 | 28 | 9.96 | 0.00 |

The Bonferroni-Holm method is based on the same assumptions, but uses a multi-step procedure to find an optimal significance level based on the observed data. This increases its power compared to the simple procedure. Let us call the unadjusted \(p\)-values of the \(q\) hypotheses \(p_1,\dots,p_q\). The method first sorts the hypotheses by observed \(p\)-value such such that \(p_{(1)}<p_{(2)}<\cdots <p_{(q)}\) and \(p_{(i)}\) is the \(i\)th smallest observed \(p\)-value. It then finds the smallest index \(j\) such that

\[

p_{(j)} > \frac{\alpha}{q+1-j}\;,

\]

and any hypothesis \(H_{0,i}\) for which \(p_i<p_{(j)}\) is declared significant. We apply this method using the adjust="holm" option.

5.2.3 Tukey’s honest significant difference (HSD) for pairwise comparisons

We gain more power if the set of contrasts has more structure and Tukey’s method is designed for the common case of all pairwise differences. It considers the distribution of the studentized range, the difference between the maximal and minimal group means by calculating honest significant differences (HSD) (John W. Tukey 1949). It requires a balanced design and rejects \(H_{0,l}: \Psi(\mathbf{w}_l) = 0\) if \[ \left|\hat{\Psi}(\mathbf{w}_l)\right| > q_{1-\alpha,k-1,N}\cdot\hat{\sigma_e}\cdot \sqrt{\frac{1}{2}\sum_{i=1}^k\frac{w_{il}^2}{n}} \quad\text{that is}\quad |\hat{\mu}_i-\hat{\mu}_j| > q_{1-\alpha,k-1,N}\cdot\frac{\hat{\sigma}_e}{\sqrt{n}}\;, \] where \(q_{\alpha,k-1,N}\) is the \(\alpha\)-quantile of the studentized range based on \(k\) groups and \(N=n\cdot k\) samples.

We calculate Tukey’s adjustment using the option adjust="tukey", and it is the default adjustment when using method="pairwise" to define the contrasts.

The result is shown in Table 5.6 (left) for our example. Since the difference between the two drug classes is very large, all but the two comparisons within each class yield highly significant estimates, but neither difference of drugs in the same class is significant after Tukey’s adjustment.

5.2.4 Dunnett’s method for comparisons against a reference

Another very common type of biological experiment uses a control group and inference focuses on comparing each treatment with this control group, leading to \(k-1\) contrasts. These contrasts are not orthogonal, and the required adjustment is provided by Dunnett’s method (Dunnett 1955). It rejects the null hypothesis \(H_{0,i}: \mu_i-\mu_1=0\) that treatment group \(i\) shows no difference to the control group 1 if \[ |\hat{\mu}_i-\hat{\mu}_1| > d_{1-\alpha, k-1, N-k}\cdot\hat{\sigma}_e\cdot\sqrt{\frac{1}{n_i}+\frac{1}{n_1}}\;, \] where again \(d_{1-\alpha, k-1, N-k}\) is the quantile of the appropriate distribution for this test.

For our example, let us assume that drug D1 is the best current treatment option, and we are interested in comparing the alternatives D2 to D4 to this reference. The required contrasts are generated when we use the method="trt.vs.ctrl1" option and the option adjust="dunnett" provides the MCP adjustment for these contrasts, resulting in Table 5.6 (right).

|

|

Unsurprisingly, enzyme levels for drug D2 are barely distinguishable from those of the reference drug D1, and D3 and D4 show very different responses than the reference. Again, using confint() with these results provides adjusted confidence limits.

5.2.5 Scheffé’s method for general purpose and post-hoc contrasts

The method by Scheffé is suitable for testing any group of contrasts, even if they were suggested by the data (Scheffe 1959); in contrast, most other methods are restricted to pre-defined contrasts. Naturally, this freedom of cherry-picking comes at a cost: the Scheffé method is extremely conservative (so effects have to be huge to be deemed significant), and is therefore only used if no other method is applicable.

The Scheffé method rejects the null hypothesis \(H_{0,l}\) if \[ \left|\hat{\Psi}(\mathbf{w}_l)\right| > \sqrt{(k-1) \cdot F_{\alpha,k-1,N-k}} \cdot \hat{\sigma} \cdot \sqrt{\sum_{i=1}^k \frac{w_{il}^2}{n_i}}\;. \] This is very similar to the Bonferroni correction, except that the number of contrasts \(q\) is irrelevant, and the quantile is a scaled quantile of an \(F\)-distribution rather than a quantile from a \(t\)-distribution.

For illustration, imagine that our three example contrasts were not planned before the experiment, but rather suggested by the data after the experiment was completed. The following R code gives the adjusted results in Table 5.7.

We notice that the \(p\)-values are much more conservative than with any other method, which reflects the added uncertainty due to the post-hoc nature of the contrasts.

| contrast | estimate | SE | df | t.ratio | p.value |

|---|---|---|---|---|---|

| D1-vs-D2 | 1.51 | 0.61 | 28 | 2.49 | 0.13 |

| D3-vs-D4 | -0.10 | 0.61 | 28 | -0.16 | 1.00 |

| Class A-vs-Class B | 4.28 | 0.43 | 28 | 9.96 | 0.00 |

5.2.6 Some final remarks on multiple comparisons

There is no general agreement on when multiple comparison corrections are necessary in statistical analyses and how strict or conservative they should be. Some eminent people argue that multiple comparison adjustments are rarely called for in designed experiments (Finney 1988,@OBrien1983), while others spent their career on clarifying the issue (Rupert Jr 2012). In general, however, results not adjusted for multiple comparisons are unlikely to be accepted without further elaboration

On the one extreme, it is relatively uncontroversial that sets of post-hoc contrasts always require an appropriate adjustment of the significance levels to achieve reasonable credibility of the analysis. On the other extreme, planned orthogonal contrasts simply partition the treatment factor sum of squares and their hypotheses are independent, so they are usually not adjusted.

Many reasonable sets of contrast are more nuanced than “every pair” or “everyone against the reference” but still have more structure than an arbitrary set of contrasts. Specialized methods might not exist, but general methods such as Bonferroni-Holm are likely overly conservative. The analyst’s discretion is then required and the most important aspect is to honestly report what was done, such that other scientists can correctly appraise the evidence in the data.

5.3 A real-life example

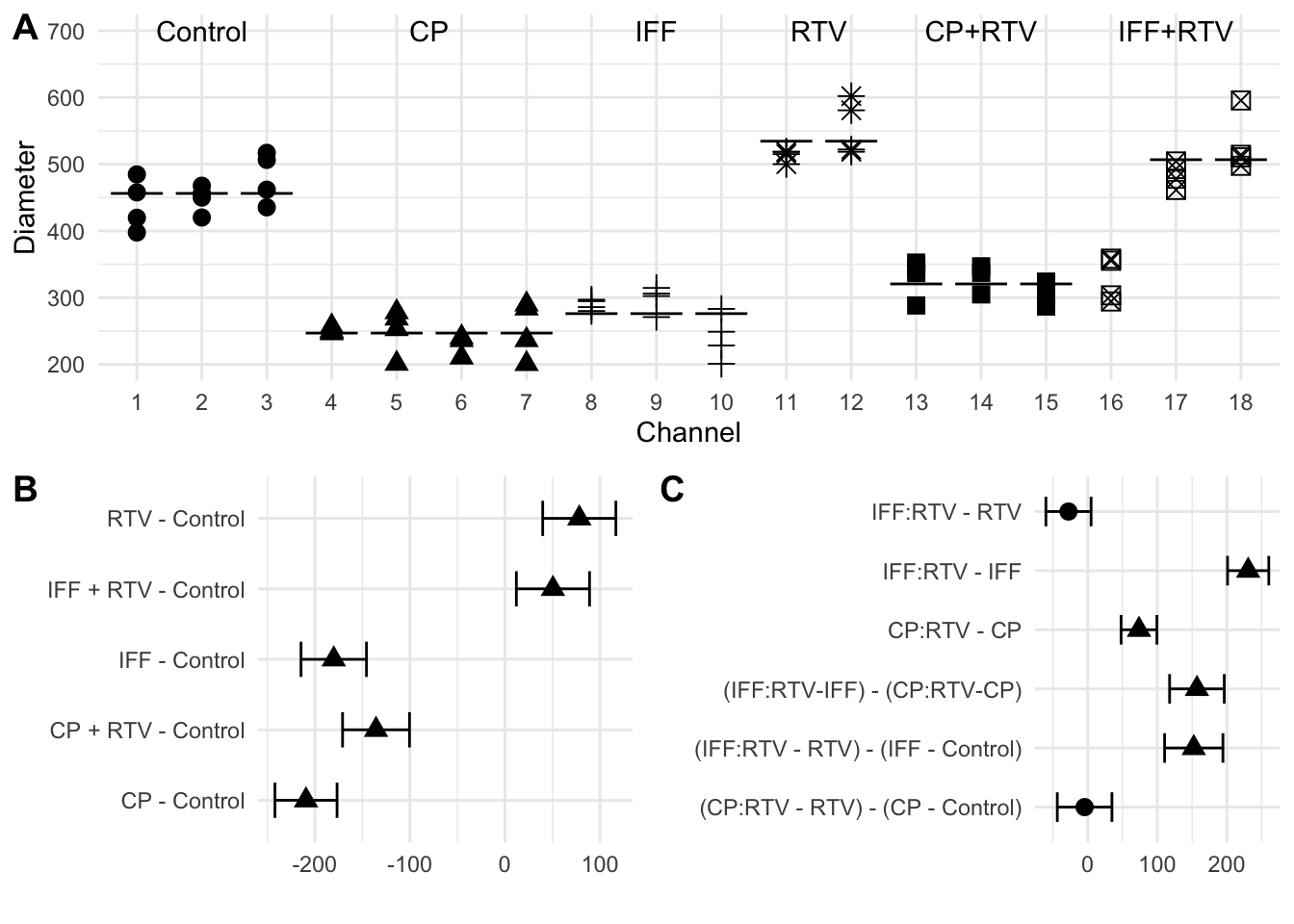

We further illustrate the one-way ANOVA and use of linear contrasts using a real-life example.7 The two anticancer drugs cyclophosphamie (CP) and Ifosfamide (IFF) become active in the human body only after metabolization in the liver by the enzyme CYP3A4, among others. The function of this enzyme is inhibited by the drug Ritanovir (RTV), which more strongly affects metabolization of IFF than CP. The experimental setup consisted of several microfluidic devices with a total of 18 independent channels; each channel contained a co-culture of multi-cellular liver spheroids for metabolization and tumor spheroids for measuring drug action.

The experiment used the diameter of the tumor (in \(\mu m\)) after 12 days as the response variable and six treatment groups: a control condition without drugs, a second control condition with RTV alone, and the four conditions CP only, IFF only, CP+RTV, and IFF+RTV. The resulting data are shown in Figure 5.2(A) for each channel.

A preliminary analysis revealed that device-to-device variation and variation from channel to channel were negligible compared to the within-channel variance, and these two factors were consequently ignored in the analysis. Thus, data are pooled over the channels for each treatment group and the experiment is analyzed as a one-way ANOVA.

Inhomogeneous variance

The omnibus \(F\)-test and linear contrast analysis require equal within-group variances between treatment groups. This hypothesis was tested using the Levene test and a \(p\)-value below 0.5% indicated that variances might differ substantially. If true, this would complicate the analysis. Looking at the raw data in Figure 5.2(A), however, reveals a potential error in channel 16, which was labelled as IFF+RTV, but shows tumor diameters in excellent agreement with the neighboring CP+RTV treatment. Including channel 16 in the IFF+RTV group massively inflates the variance estimate. It was therefore decided to remove channel 16 from further analysis, the hypothesis of equal variances is then no longer rejected (\(p>0.9\)), and visual inspection of the data confirms that dispersions are very similar between channels and groups.

Analysis of variance

As is expected from looking at the data in Figure 5.2(A), the one-way analysis of variance of tumor diameter versus treatment results in a highly significant treatment effect.

| Df | Sum Sq | Mean Sq | F value | Pr(>F) | |

|---|---|---|---|---|---|

| Condition | 5 | 815205 | 163041 | 154.5 | 6.338e-33 |

| Residuals | 60 | 63303 | 1055 |

The effect sizes are an explained variation of \(\eta^2=\) 93%, a standardized effect size of \(f^2=\) 12.88, and a raw effect size of \(b^2=\) 1.35891310^{4} (an average deviation between group means and general mean of \(b=\) 116.57)

Linear contrasts

Since the \(F\)-test does not elucidate which groups differ and by how much, we proceed with a more targeted analysis using linear contrasts to estimate and test meaningful and interpretable comparisons. With a first set of standard contrasts, we compare each treatment group to the control condition. The resulting contrast estimates are shown in Figure 5.2(B) together with their Dunnett-corrected 95%-confidence intervals.

Somewhat surprisingly, the RTV-only condition shows tumor diameters significantly larger than those under the control condition, indicating that RTV alone influences the tumor growth. Both conditions involving CP show reduced tumor diameters, indicating that CP inhibits tumor growth, as does IFF alone. Lastly, RTV seems to substantially decrease the efficacy of IFF, leading again to tumor diameters larger than under the control condition, but (at least visually) comparable to the RTV condition.

The large and significant difference between control and RTV-only poses a problem for the interpretation: we are interested in comparing CP+RTV against CP-only and similarly for IFF. But CP+RTV could be a combined effect of tumor diameter reduction by CP (compared to control) and increase by RTV (compared to control). We have two options for defining a meaningful contrast: (i) estimate the difference in tumor diameter between CP+RTV and CP. This is a comparison between the combined and single drug actions. Or (ii) estimate the difference between the change in tumor diameter from CP to control to the change from CP+RTV to RTV (rather than to control). This is a comparison between the baseline tumor diameters under control and RTV to those under addition of CP and is the net-effect of CP (provided that RTV increases tumor diameters equally with and without CP).

The two sets of comparisons lead to different contrasts, but both are meaningful for these data. The authors of the study decided to go for the first type of comparison and compared tumor diameters for each drug with and without inhibitor. The two contrasts are \(\text{IFF:RTV}-\text{IFF}\) and \(\text{CP:RTV}-\text{CP}\), shown in rows 2 and 3 of Figure 5.2(C). Both contrasts show a large and significant increase in tumor diameter in the presence of the inhibitor RTV, where the larger loss of efficacy for IFF yields a more pronounced difference.

For a complete interpretation, we are also interested in comparing the two differences between the CP and the IFF conditions: is the reduction in tumor diameter under CP smaller or larger than under IFF? This question addresses a difference of differences, a very common type of comparison in biology, when different conditions are contrasted and a ‘baseline’ or control is available for each condition. We express this question as \((\text{IFF:RTV}-\text{IFF}) - (\text{CP:RTV}-\text{CP})\), and we can sort the terms to derive the contrast form \((\text{IFF:RTV}+\text{CP}) - (\text{CP:RTV}+\text{IFF})\). Thus, we use a weight of \(+1\) for the treatment groups IFF+RTV and CP, and a weight of \(-1\) for the groups CP+RTV and IFF. The estimated contrast and confidence interval is shown in the fourth row in Figure 5.2(C): the two increases in tumor diameter under co-administered RTV are significantly different, with about 150 \(\mu m\) more under IFF than under CP.

For comparison, the remaining two rows in Figure 5.2(C) show the comparison of tumor diameter increase for each drug with and without inhibitor, where the no-inhibitor condition is compared to the control condition, but the inhibitor condition is compared to the RTV-only condition. Now, IFF shows less increase in tumor diameter than in the previous comparison, but the result is still large and significant. In contrast, we do not find a difference between the CP and CP+RTV conditions indicating that loss of efficacy for CP is only marginal under RTV. This is because the previously observed difference can be explained by the difference in ‘baseline’ between control and RTV-only. The contrasts are constructed as before: for CP, the comparison is \((\text{CP:RTV}-\text{RTV}) - (\text{CP}-\text{Control})\) which is equivalent to \((\text{CP:RTV}+\text{Control}) - (\text{RTV} + \text{CP})\).

Figure 5.2: A: Observed diameters by channel. Point shape indicates treatment. Channel 16 appears to be mislabelled. B: Estimated treatment-versus-control contrasts and Dunnett-adjusted 95%-confidence intervals based on data excluding channel 16, significant contrasts are indicated as triangles. C: As (B) for specific (unadjusted) contrasts of interest.

Conclusion

What started as a seemingly straightforward one-way ANOVA turned into a much more intricate analysis. Only the direct inspection of the raw data revealed the source of the heterogeneous treatment group variances. The untestable assumption of a mislabelling and removal of the data from channel 16 still led to a straightforward omnibus \(F\)-test and ANOVA table.

Great care is also required in the more detailed contrast analysis. While the RTV-only condition was initially thought to provide another control condition, a comparison with the empty control revealed a systematic and substantial difference, with RTV-only showing larger tumor diameters. Two sets of contrasts are then plausible, using different ‘baseline’ values for estimating the effect of RTV in conjunction with a drug. Both sets of contrasts have straightforward interpretations, but which set is more meaningful depends on the biological question. Note that the important contrast of tumor diameter differences between inhibited and uninhibited conditions compared between the two drugs is independent of this decision.

5.4 Appendix

5.4.1 Using orthogonal contrasts in aov() directly

Base-R allows providing a set of orthogonal contrasts for the treatment factor, and tell aov() to directly calculate and show the contrast sum of squares and their tests. The procedure is somewhat more complicated than one would expect, but alright then:

- create a \(k\times k\) matrix \(A\) whose first row is \((1/k,\dots,1/k)\) to encodes the grand mean;

- add the weight vector \(\mathbf{w}\) of each orthogonal contrast as a row to \(A\) (any order will do);

- invert \(A\) to get \(A^{-1}\) using

solve(); - remove the first column of \(A^{-1}\) to remove the grand mean;

- the resulting \((k-1)\times k\) matrix encodes the contrasts and gets attached to the dataframe with

contrasts()8.

The following code illustrates this procedure for our three example contrasts for the drug column in the dataframe d. The resulting contrast matrix \(A^{-1}=\) Ainv is shown in Table 5.8.

# Define contrast weight matrix

A = rbind(

c(1/4, 1/4, 1/4, 1/4),

c(1, -1, 0, 0),

c(0, 0, 1, -1),

c(1/2, 1/2, -1/2, -1/2)

)

# Invert contrast matrix

Ainv = solve(A)

# Remove first column

Ainv = Ainv[ , -1]

# Add row and column names

colnames(Ainv) = c("D1-vs-D2", "D3-vs-D4", "Class A-vs-Class B")

rownames(Ainv) = c("D1","D2","D3","D4")

# Attach to factor

contrasts(d$drug) = Ainv| D1-vs-D2 | D3-vs-D4 | Class A-vs-Class B | |

|---|---|---|---|

| D1 | 0.5 | 0.0 | 0.5 |

| D2 | -0.5 | 0.0 | 0.5 |

| D3 | 0.0 | 0.5 | -0.5 |

| D4 | 0.0 | -0.5 | -0.5 |

We can now run aov(), where we explicitly mention that we want to see the contrasts in the result using the split= argument. It needs a list with one entry for each treatment factor, and each entry is a list of names for the output, associated with the first, second, and so on orthogonal contrast.

m.ct = aov(y~drug, data=d)

sm.ct.aov = summary(m.ct, split=list(drug=c("D1-vs-D2"=1,

"D3-vs-D4"=2,

"Class A-vs-Class B"=3)))The result is

## Df Sum Sq Mean Sq F value Pr(>F)

## drug 3 155.89 51.96 35.170 1.24e-09 ***

## drug: D1-vs-D2 1 9.18 9.18 6.214 0.0189 *

## drug: D3-vs-D4 1 0.04 0.04 0.025 0.8753

## drug: Class A-vs-Class B 1 146.68 146.68 99.272 1.04e-10 ***

## Residuals 28 41.37 1.48

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1There is one row showing the omnibus \(F\)-test and the degrees of freedom and sum of squares, followed by its partition into three orthogonal contrasts. The contrast sum of squares add up to the treatment sum of squares and the individual results accord with our previous calculations. We extract the contrast estimates using the summary.lm() function:

##

## Call:

## aov.default(formula = y ~ drug, data = d)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.47625 -0.50062 0.04875 0.72969 2.03250

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 11.42906 0.21488 53.189 < 2e-16 ***

## drugD1-vs-D2 1.51500 0.60776 2.493 0.0189 *

## drugD3-vs-D4 -0.09625 0.60776 -0.158 0.8753

## drugClass A-vs-Class B 4.28188 0.42975 9.964 1.04e-10 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.216 on 28 degrees of freedom

## Multiple R-squared: 0.7903, Adjusted R-squared: 0.7678

## F-statistic: 35.17 on 3 and 28 DF, p-value: 1.237e-09References

Abelson, Robert P., and Deborah A. Prentice. 1997. “Contrast tests of interaction hypothesis.” Psychological Methods 2 (4): 315–28. https://doi.org/10.1037/1082-989X.2.4.315.

Dunnett, C W. 1955. “A multiple comparison procedure for comparing several treatments with a control.” Journal of the American Statistical Association 50 (272): 1096–1121. http://www.jstor.org/stable/2281208.

Finney, D. J. 1988. “Was this in your statistics textbook? III. Design and analysis.” Experimental Agriculture 24: 421–32.

Rupert Jr, G. 2012. Simultaneous statistical inference. Springer Science & Business Media.

Scheffe, Henry. 1959. The Analysis of Variance. John Wiley & Sons, Inc.

Tukey, John W. 1949. “Comparing Individual Means in the Analysis of Variance.” Biometrics 5 (2): 99–114. http://www.jstor.org/stable/3001913.

Tukey, J W. 1991. “The philosophy of multiple comparisons.” Statistical Science 6: 100–116. http://www.jstor.org/stable/2245714.

We can do this using a ‘contrast’ \(\mathbf{w}=(1/k,1/k,\dots,1/k)\), even though its weights do not sum to zero.↩

A small subtlety arises from estimating the contrasts: since all \(t\)-tests are based on the same estimate of the residual variance, the tests are still statistically dependent. The effect is usually so small that we ignore this subtlety in practice.↩

It is plausible that measurements on the same mouse are more similar between timepoints close together than between timepoints further apart, a fact that ANOVA cannot properly capture.↩

If 200 hypotheses seems excessive, consider a simple microarray experiment: here, the difference in expression level is simultaneously tested for thousands of genes.↩

The false discovery rate is another example.↩

This example is based on an experiment by Christian Lohasz of the Hierlemann group. He kindly allowed using the data for this course; the original analysis is currently in print and any additional analyses in this section are for illustration only.↩

This is not the same function as

contrast()that we use in conjunction withemmeans().↩