Chapter 4 Comparing more than two groups

We extend our discussion from experiments with two treatment groups to experiments with \(k\) treatment groups, assuming completely random treatment allocation. In this chapter, we develop the analysis of variance framework to address the omnibus null hypothesis that all group means are identical and there is no difference between the treatments. We also look at corresponding effect size measures and the associated power analysis, develop the connection to standard linear regression analysis, and introduce Hasse diagrams to provide a direct link between regression model and experiment design.

4.1 Experiment and data

We consider investigating four drugs for their properties to alter the metabolism in mice, and we take the level of our liver enzyme as a biomarker to indicate this alteration, where higher levels are considered as “better”. Metabolization and elimination of the drugs might be affected by the fatty acid metabolism, but for the moment we control this aspect by feeding all mice with the same low fat diet and return to the diet effect in Chapter 6.

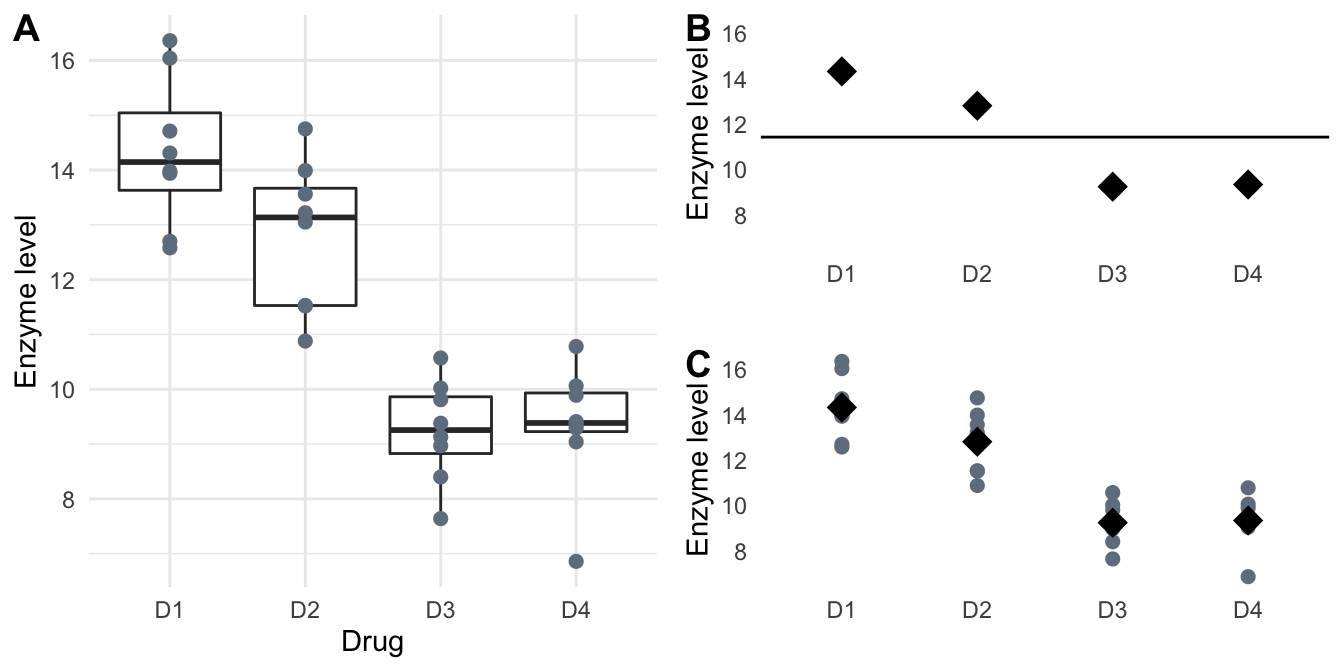

The data in Table 4.1 and Figure 4.1A show the observed enzyme levels for \(N=n\cdot k=\) 32 mice, with \(n=\) 8 mice randomly assigned to one of the four drugs D1, D2, D3, and D4. We denote the four average treatment group responses as \(\mu_1,\dots,\mu_4\); we are interested in testing the omnibus hypothesis \(H_0: \mu_1=\mu_2=\mu_3=\mu_4\) that the group averages are identical and the four drugs therefore have the same effect on the enzyme levels.

Other interesting questions regard estimation and testing of specific treatment group comparisons, but we postpone these to Chapter 5.

| D1 | 13.94 | 16.04 | 12.70 | 13.98 | 14.31 | 14.71 | 16.36 | 12.58 |

| D2 | 13.56 | 10.88 | 14.75 | 13.05 | 11.53 | 13.22 | 11.52 | 13.99 |

| D3 | 10.57 | 8.40 | 7.64 | 8.97 | 9.38 | 9.13 | 9.81 | 10.02 |

| D4 | 10.78 | 10.06 | 9.29 | 9.36 | 9.04 | 9.41 | 6.86 | 9.89 |

Figure 4.1: Observed enzyme levels in response to four drug treatments with eight mice per treatment group. A: Full data with boxplots to indicate summary measures. B: Grand mean (horizontal line) and group means (diamonds) used in estimating the between-group variance. C: Individual responses (grey points) are compared to group means (black diamonds) for estimating the within-group variance.

4.2 One-way analysis-of-variance

In the general case of a balanced completely randomized design with \(k\) treatments randomly allocated to \(n\) experimental units each, we denote the response of the \(j\)th experimental unit in the \(i\)th treatment group by \(y_{ij}\) for \(i=1\dots k\) and \(j=1\dots n\). We assume that the responses \(y_{ij}\sim N(\mu_i,\sigma_e^2)\) are normally distributed with group-specific expectation \(\mu_i\) and common variance \(\sigma_e^2\).

4.2.1 Testing equality of means by comparing variances

For \(k=2\) treatment groups, the omnibus null hypothesis is \(H_0: \mu_1=\mu_2\) and can be tested using a \(t\)-test on the group difference \(\Delta=\mu_1-\mu_2\). For \(k>2\) treatment groups, the corresponding omnibus null hypothesis is \(H_0:\mu_1=\dots =\mu_k\), and the idea of using a difference for testing does not extend to this case.

The crucial observation for deriving a test statistic for the general omnibus null hypothesis comes from changing our perspective on the problem: if the treatment group means \(\mu_i\equiv \mu\) are all equal, then we can consider their estimates \(\hat{\mu}_i=\sum_{j=1}^n y_{ij}/n\) as independent ‘samples’ from a normal distribution with mean \(\mu\) and variance \(\sigma_e^2/n\) (Figure 4.1B). We can then estimate their variance using the usual formula \[ \widehat{\text{Var}}(\hat{\mu}_i) = \sum_{i=1}^k (\hat{\mu}_i-\hat{\mu})^2/(k-1)\;, \] where \(\hat{\mu}=\sum_{i=1}^k \hat{\mu}_i/k\) is an estimate of the common mean. Since \(\text{Var}(\hat{\mu}_i)=\sigma_e^2/n\), this provides us with an estimator \[ \tilde{\sigma}_e^2=n\cdot\widehat{\text{Var}}(\hat{\mu}_i)=n\cdot\sum_{i=1}^k (\hat{\mu}_i-\hat{\mu})^2/(k-1) \] for the variance \(\sigma_e^2\) that only considers the dispersion of group means around the common mean and is independent of the dispersion of individual observations around their group mean.

On the other hand, our previous estimator \[ \hat{\sigma}_e^2 = \sum_{i=1}^k\sum_{j=1}^n (y_{ij}-\hat{\mu}_i)^2 / (N-k) \] also estimates the variance \(\sigma_e^2\) (Figure 4.1C). It only considers the dispersion of observations around their group means, and is independent of a general mean. For example, we could add a fixed number to each measurements in one group and this would not affect \(\hat{\sigma}_e^2\) (but would affect \(\tilde{\sigma}_e^2\)).

The two estimators have expectations \[ \mathbb{E}(\tilde{\sigma}_e^2) = \sigma_e^2 + \underbrace{\frac{1}{k-1}\,\sum_{i=1}^k n\cdot(\mu_i-\mu)^2}_{Q} \quad\text{and}\quad \mathbb{E}(\hat{\sigma}_e^2) = \sigma_e^2\;, \] respectively. Thus, while \(\hat{\sigma}_e^2\) is always an unbiased estimator of the residual variance, the estimator \(\tilde{\sigma}_e^2\) has bias \(Q\)—the between-groups variance but is unbiased precisely if \(H_0\) is true and \(Q=0\). If \(H_0\) is true, then the ratio \[ F = \frac{\tilde{\sigma}_e^2}{\hat{\sigma}_e^2} \sim F_{k-1,N-k} \] has an \(F\)-distribution with \(k-1\) numerator and \(N-k\) denominator degrees of freedom. The value of \(F\) deviates from this distribution the larger the term \(Q\) becomes, that is, the ‘further away’ the observed group means are from equality. The corresponding \(F\)-test thus provides the required test of the omnibus hypothesis \(H_0:\mu_1=\cdots =\mu_k\).

For our example, we estimate the four group means \(\hat{\mu}_1=\) 14.33, \(\hat{\mu}_2=\) 12.81, \(\hat{\mu}_3=\) 9.24, and \(\hat{\mu}_4=\) 9.34 and an overall mean \(\hat{\mu}=\) 11.43. Their squared differences are \((\hat{\mu}_1-\hat{\mu})^2=\) 8.42, \((\hat{\mu}_2-\hat{\mu})^2=\) 1.91, \((\hat{\mu}_3-\hat{\mu})^2=\) 4.79, and \((\hat{\mu}_4-\hat{\mu})^2=\) 4.36. With \(n=\) 8 mice per treatment group, this gives the two estimates \(\tilde{\sigma}_e^2=\) 51.96 and \(\hat{\sigma}_e^2=\) 1.48 for the variance. Their ratio is 35.17 and comparing it to a \(F\)-distribution with \(k-1=\) 3 numerator and \(N-k=\) 28 denominator degrees of freedom gives a \(p\)-value of \(p=\) 1.24\(\times 10^{-9}\), indicating strongly that the group means are not identical.

Perhaps surprisingly, the \(F\)-test is identical to the \(t\)-test for \(k=2\) treatment groups, and will give the same \(p\)-value. We can see this directly using a little algebra: \[ F = \frac{n\cdot((\hat{\mu}_1-\hat{\mu})^2+(\hat{\mu}_2-\hat{\mu})^2)/(2-1)}{\hat{\sigma}_e^2} = \frac{\frac{n}{2}\cdot \left(\hat{\mu}_1-\hat{\mu}_2\right)^2}{\sigma_e^2} = \left(\frac{\hat{\mu}_1-\hat{\mu}_2}{\sqrt{2}\sigma_e/\sqrt{n}}\right)^2=T^2\;, \] and \(F_{1,2n-2}=t^2_{2n-2}\). While the \(t\)-test uses a difference in means, the \(F\)-test uses the average squared difference from group means to general mean; for two groups, these concepts are equivalent.

4.2.2 Analysis of variance

Our derivation of the omnibus \(F\)-test made extensive use of a decomposition of the data into a between-groups and a within-groups component. We can exploit this decomposition further in the (one-way) analysis of variance (ANOVA) by directly partitioning the overall variation in the data via sums of squares and their associated degrees of freedom. In the words of its originator:

The analysis of variance is not a mathematical theorem, but rather a convenient method of arranging the arithmetic. (???)

The arithmetic advantages of the analysis of variance are no longer relevant today, but the decomposition of the data into various parts for explaining the observed variation remains an easily interpretable summary of the experimental results.

Partitioning the data

To stress that ANOVA decomposes the variation in the data, we first write each datum \(y_{ij}\) as a sum of three components: the grand mean, deviation of group mean to grand mean, and deviation of datum to group mean: \[ y_{ij} = \bar{y}_{\cdot\cdot} + (\bar{y}_{i\cdot}-\bar{y}_{\cdot\cdot}) + (y_{ij}-\bar{y}_{i\cdot})\;, \] where \(\bar{y}_{i\cdot}=\sum_j y_{ij}/n\) is the average of group \(i\), and \(\bar{y}_{\cdot\cdot}=\sum_i\sum_j y_{ij}/nk\) is the grand mean, and a dot indicates summation over the corresponding index.

For example, the first datum in the second group, \(y_{21}=\) 13.56, is deomposed into the grand mean \(\bar{y}_{\cdot\cdot}=\) 11.43, the deviation from group mean \(\bar{y}_{2\cdot}=\) 12.81 to grand mean \((\bar{y}_{2\cdot}-\bar{y}_{\cdot\cdot})=\) 1.38 and the residual within the \(D2\) group \(y_{21}-\bar{y}_{2\cdot}=\) 0.75.

Partitioning the sums of squares

We quantify the overall variation in the observations by the total sum of squares, the summed squared distances of each datum \(y_{ij}\) to the estimated grand mean \(\bar{y}_{\cdot\cdot}\). Following the partition of each datum, the total sum of squares also partition into two parts: the treatment (or between-groups) sum of squares, which measures the variation between group means, and the residual (or within-groups) sum of squares, which measures the variation of responses within each group: \[\begin{equation} \text{SS}_\text{tot} = \sum_{i=1}^k\sum_{j=1}^{n}(y_{ij}-\bar{y}_{\cdot\cdot})^2 = \underbrace{\sum_{i=1}^k n\cdot (\bar{y}_{i\cdot}-\bar{y}_{\cdot\cdot})^2}_{\text{SS}_\text{trt}} + \underbrace{\sum_{i=1}^k\sum_{j=1}^{n} (y_{ij}-\bar{y}_{i\cdot})^2}_{\text{SS}_\text{res}} \;. \tag{4.1} \end{equation}\] The intermediate term \(2\sum_{i}\sum_{j} (y_{ij}-\bar{y}_{i\cdot})(\bar{y}_{i\cdot}-\bar{y}_{\cdot\cdot})=0\) vanishes because \(\text{SS}_\text{trt}\) is based on group means and grand mean, while \(\text{SS}_\text{res}\) is independently based on observations and group means. Thus, the overall variation \(\text{SS}_{\text{tot}}\) decomposes into one part explained by the systematic differences between the treatments (\(\text{SS}_{\text{trt}}\)) and another part of ‘unexplained’ random variation within each group (\(\text{SS}_{\text{res}}\)).

For our example, we find a total sum of squares of \(\text{SS}_\text{tot}=\) 197.26, a treatment sum of squares \(\text{SS}_\text{trt}=\) 155.89, and a residual sum of squares \(\text{SS}_\text{res}=\) 41.37; as expected the latter two sum precisely to \(\text{SS}_\text{tot}\). Thus, most of the observed variation in the data is due to systematic differences between the treatment groups.

Partitioning the degrees of freedom

Associated with each sum of squares term is its degrees of freedom, the independent components used to calculate it. The total degrees of freedom for \(\text{SS}_{\text{tot}}\) are \(\text{df}_\text{tot}=N-1\), because we have \(N\) response values, and need to compute a single value \(\bar{y}_{\cdot\cdot}\) to find the sum of squares. The treatment degrees of freedom are \(\text{df}_\text{trt}=k-1\), because there are \(k\) treatment means, estimated by \(\bar{y}_{i\cdot}\), but the calculation of the sum of squares requires the overall average \(\bar{y}_{\cdot\cdot}\). Finally, there are \(N\) residuals, but we used up 1 degree of freedom for the overall average, and \(k-1\) for the group averages, leaving us with \(\text{df}_\text{res}=N-k\) degrees of freedom. Thus, \[ \text{df}_\text{tot} = \text{df}_\text{trt} + \text{df}_\text{res} \;. \] This decomposition tells us how much of the data we ‘use up’ for calculating each sum of squares component.

Mean squares

Dividing each sum of squares by its degrees of freedom gives the corresponding mean squares, which are exactly our two variance estimates. The treatment mean squares are given by \[ \tilde{\sigma}_e^2 = \text{MS}_\text{trt} = \frac{\text{SS}_\text{trt}}{\text{df}_\text{trt}} = \frac{\text{SS}_\text{trt}}{k-1} \] and are our first variance estimate based on group means and grand mean, while the residual mean squares \[ \hat{\sigma}_e^2 = \text{MS}_\text{res} = \frac{\text{SS}_\text{res}}{\text{df}_\text{res}} = \frac{\text{SS}_\text{res}}{N-k}\;, \] are our second independent estimator for the within-group variance.

In our example, we find \(\text{MS}_\text{res}=\) 41.37 / 28 = 1.48 and \(\text{MS}_\text{trt}=\) 155.89 / 3 = 51.96.

In contrast to the sum of squares, the mean squares do not decompose by factor and \(\text{MS}_\text{tot}=\text{SS}_\text{tot}/(N-1)=\) 6.36 \(\not=\text{MS}_\text{trt}+\text{MS}_\text{res}=\) 53.44.

Omnibus \(F\)-test

Our \(F\)-statistic for testing the omnibus hypothesis \(H_0:\mu_1=\cdots=\mu_k\) is then \[ F = \frac{\text{MS}_\text{trt}}{\text{MS}_\text{res}} = \frac{\text{SS}_\text{trt}/\text{df}_\text{trt}}{\text{SS}_\text{res}/\text{df}_\text{res}} \sim F_{\text{df}_\text{trt}, \text{df}_\text{res}}\;, \] and we reject \(H_0\) if the observed \(F\)-statistic exceeds the \((1-\alpha)\)-quantile \(F_{1-\alpha,\text{df}_\text{trt}, \text{df}_\text{res}}\).

Based on the sum of squares and degrees of freedom decompositions, we again find the observed test statistic of \(F=\) 51.96 / 1.48 = 35.17 on \(\text{df}_\text{trt}=\) 3 \(\text{df}_\text{res}=\) 28 degrees of freedom, corresponding to a \(p\)-value of \(p=\) 1.24\(\times 10^{-9}\).

ANOVA table

The results of an ANOVA are usually presented in an ANOVA-table such as Table 4.2 for our example.

An ANOVA table has one row for each source of variation, and the first column gives the name of each source. The remaining columns give (i) the degrees of freedom available for calculating the sum of squares (indicating how much of the data is ‘used’ for this source of variation); (ii) the sum of squares to quantify the variation attributed to the source; (iii) the resulting mean squares used for testing; (iv) the observed value of the \(F\)-statistic for the omnibus null hypothesis and (v) the corresponding \(p\)-value.

| Source | df | SS | MS | F | p |

|---|---|---|---|---|---|

| drug | 3 | 155.89 | 51.96 | 35.2 | 1.24e-09 |

| residual | 28 | 41.37 | 1.48 |

4.2.3 Effect size measures

The raw difference \(\Delta\) or the standardized difference \(d\) provide easily interpretable effect size measures for the case of \(k=2\) treatment groups that we can use in conjuction with the \(t\)-test. We now introduce three effect size measures for the case of \(k>2\) treatment groups for use in conjunction with an omnibus \(F\)-test.

A simple effect size measure for determining the importance of the treatment factor is the variation explained, which is the proportion of the factor’s sum of squares and the total sum of squares: \[ \eta^2_\text{trt} = \frac{\text{SS}_\text{trt}}{\text{SS}_\text{tot}} = \frac{\text{SS}_\text{trt}}{\text{SS}_\text{trt} + \text{SS}_\text{res}}\;. \] A large value of \(\eta^2_\text{trt}\) indicates that the majority of the variation is not due to random variation between observations in the same treatment group, but rather due to the fact that the average responses to the treatments are different; this would indicate a large treatment effect. In our example, we find \(\eta^2_\text{trt}=\) 155.89 \(/\) 197.26 \(=\) 0.79, confirming that the differences between drugs are responsible for 79% of the variation in the data.

A raw effect size is \[ b^2=\frac{1}{k}\sum_{i=1}^k(\mu_i-\mu)^2=\frac{1}{k}\sum_{i=1}^k\alpha_i^2\;, \] with \(\alpha_i=\mu_i-\mu\). It measures the average deviation from a group mean to the general mean.

A corresponding standardized effect size was proposed by Cohen (Cohen 1992): \[ f^2 = b^2/\sigma_e^2 = \frac{1}{k\sigma_e^2}\sum_{i=1}^k \alpha_i^2\;, \] It measures the average deviation between group means and general mean in units of residual variance, and specializes to \(f=d/2\) for \(k=2\) group. Extending from his classification of effect sizes \(d\), Cohen proposed that values of \(f>0.1\), \(f>0.25\), and \(f>0.4\) may be interpreted as small, medium, and large effects (Cohen 1992). It can be estimated as \[ \hat{f}^2 = \frac{\text{SS}_\text{trt}}{\text{SS}_\text{res}} = \frac{k-1}{N-k}\cdot F \;, \] where \(F\) is the observed value of the \(F\)-statistic, yielding \(\hat{f}^2=\) 3.77 for our example.

The two effect sizes \(f^2\) and \(\eta^2_\text{trtr}\) are translated into each other via \[\begin{equation} f^2 = \frac{\eta^2_\text{trt}}{1-\eta^2_\text{trt}} \quad\text{and}\quad \eta^2_\text{trt} = \frac{f^2}{1+f^2}\;. \tag{4.2} \end{equation}\] Much like the omnibus \(F\)-test, all three effect sizes quantify any pattern of group mean differences, and do not distinguish if each group deviates slightly from the overall mean, or if one group deviates by a substantial amount while the remaining do not.

4.3 Power analysis and sample size for omnibus \(F\)-test

We are often interested in determining the necessary sample size in a balanced design, such that the omnibus \(F\)-test reaches a desired power for a given significance level. Just as before, we need four out of the following five quantities for a power analysis: the two error probabilities \(\alpha\) and \(\beta\), the residual variance \(\sigma_e^2\), the sample size per group \(n\) (we only consider balanced designs), and a measure of the minimal relevant effect size (raw or standardized).

4.3.1 General idea

Recall that under the null hypothesis of equal treatment group means, the deviations \(\alpha_i=\mu_i-\mu\) are all zero, and the test statistic \(F=\text{MS}_\text{trt}/\text{MS}_\text{res}\) follows an \(F\)-distribution with \(k-1\) numerator and \(N-k\) denominator degrees of freedom.

If the treatment effects are not zero, then the test statistic follows a noncentral \(F\)-distribution \(F_{k-1, N-k}(\lambda)\) with noncentrality parameter \[ \lambda = n\cdot k\cdot f^2 = \frac{n\cdot b^2}{\sigma_e^2}= \frac{n}{\sigma_e^2}\cdot \sum_{i=1}^k \alpha_i^2\;. \] The noncentrality parameter is thus the product of the overall sample size \(N=nk\) and the standardized effect size \(f^2\) (which can be translated from and to \(\eta^2_\text{trt}\) via Eq. (4.2)). For \(k=2\), this reduces to the previous case since \(f^2=d^2/4\) and \(t^2_n(\eta)=F_{1,n}(\lambda=\eta^2)\).

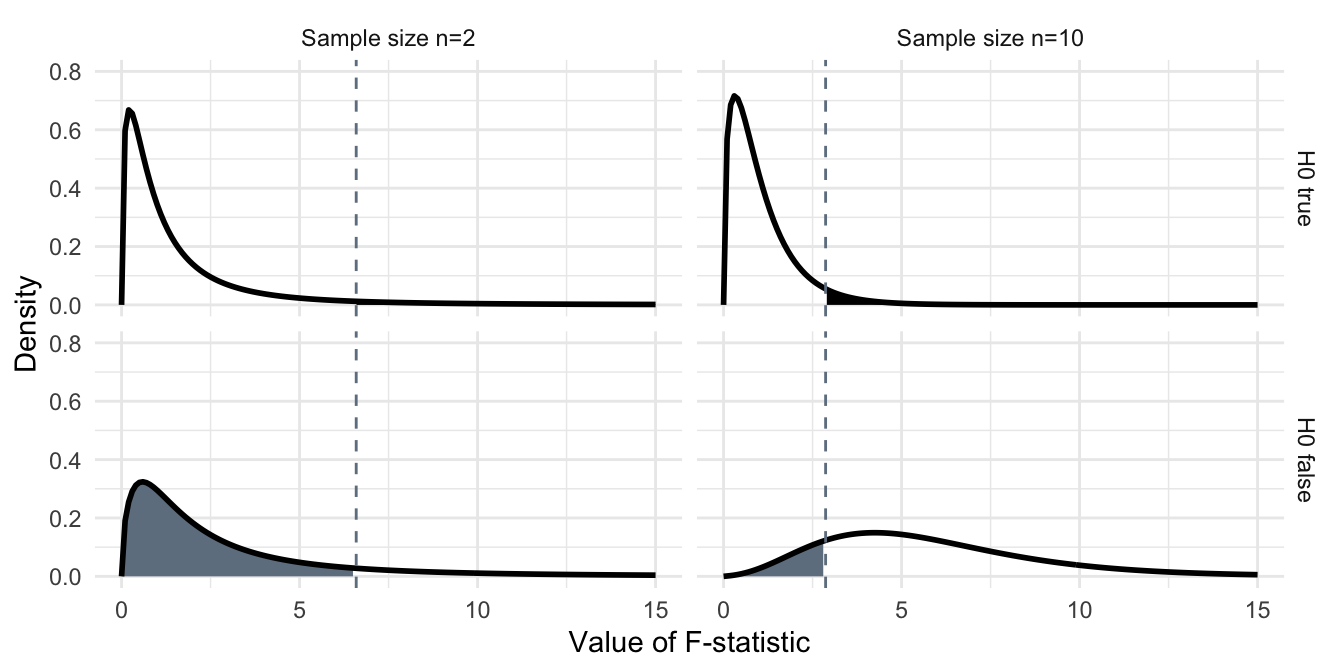

The idea behind the power analysis is the same as for the \(t\)-test. If the omnibus hypothesis \(H_0\) is true, then the \(F\)-statistic follows a central \(F\)-distribution with \(k-1\) and \(N-k\) degrees of freedom, shown in Figure 4.2(top) for two sample sizes \(n=\) 2 (left) and \(n=\) 10 (right). The hypothesis is rejected at the significance level \(\alpha=5\%\) (black shaded area) whenever the observed \(F\)-statistic is larger than the 95%-quantile \(F_{1-\alpha,\, k-1,\, N-k}\) (grey dashed line).

If \(H_0\) is false, then the \(F\)-statistic follows a noncentral \(F\)-distribution. Two corresponding examples are shown in Figure 4.2(bottom) for an effect size \(f^2=\) 0.34 corresponding, e.g., to a difference of \(\delta=2\) between first and second treatment group, with no difference between the remaining two treatment groups and the grand mean. We observe that this distribution shifts to higher values with increasing sample size, since its noncentrality parameter \(\lambda=n\cdot k\cdot f^2\) increases with \(n\). For \(n=\) 2, we have \(\lambda=2\cdot 4\cdot f^2=\) 2.72 in our example, while for \(n=\) 10, we already have \(\lambda=10\cdot 4\cdot f^2=\) 13.6. In each case, the probability \(\beta\) of falsely not rejecting \(H_0\) (a false positive) is the grey shaded area under the density up to the rejection quantile \(F_{1-\alpha,\, k-1,\, N-k}\) of the central \(F\)-distribution. For \(n=\) 2, the corresponding power is then \(1-\beta=\) 13% which increases to \(1-\beta=\) 85% for \(n=\) 10.

Figure 4.2: Distribution of F-statistic if H0 is true (top) with false positives (black shaded area), respectively if H0 is false and the first two groups differ by a value of two (bottom) with false negatives (grey shaded area). The dashed line indicates the 95%-quantile of the central F-distribution. Left: sample size n=2 right: sample size n=10.

4.3.2 Defining the minimal effect size

The number of treatments \(k\) is usually predetermined, and we then exploit the relation between noncentrality parameter, effect size, and sample size for the power analysis. A main challenge in practice is to decide upon a reasonable minimal effect size \(f_0^2\) or \(b_0^2\) that we want to reliably detect. Using a minimal raw effect size also requires an estimate of the residual variance \(\sigma_e^2\), e.g., from previous data or a dedicated preliminary experiment.

A simple method to provide a minimal effect size uses the fact that \(f^2\geq d^2/2k\) for the standardized effect size \(d\) between any pair of group means. The standardized difference \(d_0=\delta_0/\sigma=(\mu_{\text{max}}-\mu_\text{min})/\sigma\) between the largest and smallest group means therefore provides a conservative minimal effect size \(f^2_0=d_0^2/2k\) (Kastenbaum, Hoel, and Bowman 1970).

We can improve on the inequality for specific cases, and Cohen proposed three patterns with minimal, medium, and maximal variability of treatment group differences \(\alpha_i\), and provided their relation to the minimal standardized difference \(d_0\) (Cohen 1988, 276ff).

- If only two groups show a deviation from the common mean, we have \(\alpha_{\text{max}}=+\delta_0/2\) and \(\alpha_{\text{min}}=-\delta_0/2\) for these two groups, respectively, while \(\alpha_i=0\) for the \(k-2\) remaining groups. Then, \(f_0^2=d_0^2/2k\) and our conservative effect size is in fact exact.

- If the groups means \(\mu_i\) are equally spaced with distances \(\delta/(k-1)\), then the omnibus effect size is \(f^2=d^2/4\cdot(k+1)/3(k-1)\). For \(k=4\) and \(d=3\), an example is \(\mu_1=1\), \(\mu_2=2\), \(\mu_3=3\), and \(\mu_4=4\).

- If half of the groups is at one extreme \(\alpha_{\text{max}}=+d/2\) while the other half is at the other extreme \(\alpha_{\text{min}}=-d/2\), then \(f^2=d^2/4\) if \(k\) is even and \(f^2=d^2/4\cdot (1-1/k^2)\) if \(k\) is odd. Again for \(k=4\) and \(d=3\), an example is \(\alpha_1=\alpha_3=-1.5\), \(\alpha_2=\alpha_4=+1.5\). For \(\mu=10\), this corresponds to \(\mu_1=\mu_3=8.5\) and \(\mu_2=\mu_4=11.5\).

4.3.3 Calculating power

The built-in procedure power.anova.test() provides the necessary calculations for the omnibus \(F\)-test power analysis in R. This procedure accepts the number of groups \(k\) (groups=), the per-group sample size \(n\) (n=), the residual variance \(\sigma_e^2\) (within.var=), the power \(1-\beta\) (power=), the significance level \(\alpha\) (sig.level=), and a modified version of the raw effect size \(\nu^2=\sum_i \alpha_i^2/(k-1)\) (between.var=) as its arguments. Given any four of these parameters, it will calculate the remaining one.

We look at a range of examples to illustrate the use of power analysis in R. We assume that our previous analysis was completed and we intend to explore new experiments of the same type. This allows us to use the variance estimate \(\hat{\sigma}_e^2\) as our assumed within-group variance for the power analyses, where we round this estimate to \(\hat{\sigma}_e^2=\) 1.5 for the following calculations. In all examples, we set our false positive probability to the customary \(\alpha=5\%\).

Raw effect size \(b^2\)

From a minimal raw effect size \(b_0^2\), we find the corresponding between.var argument as

\[

\nu^2_0 = \frac{k}{k-1}\cdot b_0^2\;.

\]

For example, we might consider an effect with \(\alpha_1=\alpha_2=+1\) and \(\alpha_3=\alpha_2=-1\), such that \(D1\) and \(D2\) yield identically higher average enzyme levels, while \(D3\) and \(D4\) yield correspondingly lower enzyme levels. Then our raw effect size is \(b_0^2=(+1)^2+(+1)^2+(-1)^2+(-1)^2/4=1\) and we use between.var= 1.33 and within.var= 1.5 for our calculation. For a required power of 80%, this yields \(n=\) 5 mice per group. Using only \(n=\) 2 mice per group, we achieve a power of \(1-\beta=\) 21%.

Standardized effect size \(f^2\)

From a minimal standardized effect size \(f^2_0\), we find the corresponding between.var argument as

\[

\nu^2_0 = \frac{k}{k-1}\cdot\sigma_e^2\cdot f_0^2\;,

\]

but this formula defeats the purpose of a standardized effect size, since it explicitly requires the residual variance. The solution to this problem comes from noticing that since we measure effects in units of standard deviation, we can set \(\sigma_e^2=1\) in this formula and use it in conjunction with within.var=1 to achieve the desired result.

The standardized effect sizes of \(f^2_0=\) 0.01 (small), \(f^2_0=\) 0.06 (medium), and \(f^2_0=\) 0.16 (large) then translate to \(\nu_0^2=\) 0.02, \(\nu_0^2=\) 0.12, and \(\nu_0^2=\) 0.32, respectively, for a residual variance of \(\sigma_e^2=1\).

For a sample size of 10 mice per drug, these minimal effect sizes correspond to a power of 7%, 21%, and 50%, respectively, and to achieve a desired power of 80% would require 274, 45, and 18 mice per drug, respectively.

Range of group mean differences

For finding the required sample size based on a minimal group difference, we consider planning a follow-up experiment to detect a difference of at least \(\delta_0=\) 1 between the average enzyme levels of D1 and D2. With our assumed variance of \(\sigma_e^2=\) 1.5, this corresponds to a standardized effect size of \(d_0=\delta_0/\sigma=\) 0.82.

We use Cohen’s first pattern and set \(\alpha_1=\delta_0/2\), \(\alpha_2=-\delta_0/2\), \(\alpha_3=\alpha_4=0\), which yields a standardized effect size of \(f^2=\) 0.083, respectively \(\eta^2=\) 0.077. Using the formulas above, this corresponds to a between.var parameter of \(\nu_0^2=\) 0.17.

The required sample size for a power of 80% is 34 mice per group.

Variance explained \(\eta^2\)

To plan an experiment that yields \(\eta^2_\text{0,drug}=\) 20% of the variation explained by the differences in drugs, we proceed as for \(f_0^2\) and translate this effect size into the between.var argument by

\[

\nu_0^2 = \frac{k}{k-1}\cdot \frac{\eta^2_\text{0,drug}}{1-\eta^2_\text{0,drug}}\;,

\]

using \(\sigma_e^2=1\) here and as our within.var argument.

For \(k=\) 4 groups, this yields a parameter of \(\nu_0^2=\) 0.19 and we can detect this effect size with power \(1-\beta=\) 44% for a sample size of \(n=\) 10 per treatment group. Similarly, we require \(n=\) 20 mice per drug treatment to achieve a desired power of 80%.

Minimal detectable effect size

We can also find the minimal effect size detectable for a given sample size and power. For example, we might plan a similar experiment with the same four drugs, but we have only maximally 20 mice per drug available. Plugging everything in, we find a detectable between-groups variation of \(\nu_0^2=\) 0.29, corresponding to an effect size of \(f^2=\) 0.14 or \(\eta^2_{\text{drug}}=\) 13%.

4.3.4 Power analysis in practice

Power calculations are predicated on multiple assumption such as normally distributed response values and independence of observations, all of which might hold only approximately. The most severe uncertainty is the residual variance, which we usually base on an estimate from a previous experiment or an educated guess. Even though methods like power.anova.test() return the sample size \(n\) with deceptively precise six(!) decimals, it is therefore prudent to consider the uncertainty in the variance estimate and its impact on the resulting power and samples size and to allow for a margin of error.

A very conservative approach is to consider a worst-case scenario and use the upper confidence limit instead of the variance estimate for the power analysis. For our example, the residual variance is \(\hat{\sigma}_e^2\approx\) 1.5 with a 95%-confidence interval of [0.94, 2.74]. For \(k=\) 4 groups, a desired power of 80% and a significance level of 5%, detecting a raw effect of \(b_0^2=\) 0.12 (\(f_0^2=\) 0.08) requires 34 mice per group. Taking the upper confidence limit, the required sample size increases by a factor of roughly \(\text{UCL}/\hat{\sigma}_e^2\approx\) 2.74 / 1.5 = 1.8 to 61.

A more optimistic approach uses the estimated variance for the calculation and then adds a safety margin to the resulting sample size to compensate for uncertainties. The safety margin is fully at the discretion of the researcher and subject-matter knowledge might suggest a useful percentage. In practice, adding 20%-30% to the sample size seems to be reasonable in many cases and increases the sample size in our example from 34 to 40.

4.3.5 Portable power

A main complication for an exact power analysis for \(F\)-tests is the non-trivial dependence of the noncentrality parameter \(\lambda\) on the significance level \(\alpha\), the power \(1-\beta\), and the numerator and denominator degrees of freedom for the \(F\)-test in question. The portable power procedure exploits the fact that for the common signifiance level \(\alpha=5\%\) and a commonly desired power of \(1-\beta=80\%\), the noncentrality parameter changes comparatively little, allowing us to use a crude approximation for our calculations (Wheeler 1974). Such a procedure is very helpful in the early stages of planning an experiment, when all is needed are reasonably accurate approximations for sample sizes to gauge the practical implications of an experiment design.

The portable power procedure uses the quantity \(\phi^2 = \lambda / k\), which relates to effect size and sample size via \[ \phi^2 = n\cdot f^2\;. \] This quantity is reasonably well approximated by by \(\phi^2=5\) if we expect few (less than 10, say) denominator degrees of freedom and by \(\phi^2=3\) if we expect many such degrees of freedom. It becomes smaller with increasing number of treatment groups, but this dependency is often negligible in practice. A reasonable strategy is then to calculate the required sample size \(n=\phi^2/f^2\) assuming \(\phi^2=3\), and increase \(\phi^2\) to four or five if the resulting \(n\) is quite small.

We illustrate the procedure by revisiting two previous examples. Recall that for \(k=4\) and a minimal difference of \(\delta_0=\) 1, we found an effect size of \(f^2=\) 0.083. The exact power analysis for \(\alpha=5\%\) and \(1-\beta=80\%\) indicated a required sample size of 34. Assuming that the required sample size is sufficiently large, we approximate this analysis using \(\phi^2=\) 3, which yields a sample size of \(n=\phi^2/f^2=\) 3 / 0.083 \(\approx\) 36 and overestimates the exact value by about 7%.

Using again \(\alpha=5\%\) and \(1-\beta=80\%\), a sample size of \(n=\) 20 mice in each of four treatment groups showed a minimal detectable effect size of \(f^2=\) 0.14 using the exact calculation. Using the large sample approximation \(\phi^2=\) 3, the portable power calculation yields \(f^2=\) 0.15, an error of about 4%.

The portable power approximations are helpful for getting an idea if a necessary minimal effect size is within reach given our resources, and for finding a rough estimate of the minimal effect detectable given a specific experiment size. The approximation error is typically less than 35% and often considerably lower. Given that the variance is often much less precise, this magnitude of error should be acceptable for a rough calculation in most circumstances. Once a suitable design and sample size is identified, we should of course use the exact methods and an error margin to determine the final sample size.

4.4 Hasse diagrams, model formulae and linear regression

The analysis of variance has an intimate connection with classical linear regression and both methods are based on describing the observed data by a linear mathematical model. The analysis of much more complex designs becomes relatively straightforward when this connection is exploited and most statistical software will internally run a linear regression procedure for computing an ANOVA. While this relieves the practitioner from much tedious algebra, it still means that the appropriate linear model for an experimental design has to be correctly specified for the software.

The specification has two parts: first, the experimental design has to be translated into the linear model, such that the statistical inferences fully capture the logical structure of our experiment. And second, the linear model has to be translated into a model specification in the software. We can solve both problems by Hasse diagrams that visualize the logical structure of an experiment, and from which both the linear model formula and a symbolic representation of the model for the use in R can be derived with relative ease. We already saw some simple examples of these diagrams in Figures 2.4, 2.7 and 2.8. We now work out the connection between design, diagram, and model more systematically, and also briefly explore the connection of ANOVA and linear regression to enable correct interpretation of software output. Some of the following discussions still seem overly complicated for the relatively simple designs discussed so far, but are necessary for more complex designs in the following chapters.

4.4.1 Hasse diagrams of experiment structure

We introduce some additional terminology to be able to precisely describe the logical structure of an experiment.

Treatment, unit, and response structures

The treatment structure of an experiment describes the treatment factors and their relationships. In our drug example, the experiment has a single treatment factor Drug with four levels \(D1\), \(D2\), \(D3\), and \(D4\). Other designs use several treatment factors, and each applied treatment is then a combination of one level from each treatment factor.

The unit (or design) structure describes the unit factors and their relationships. A unit factor logically organizes the experimental material, and our experiment has a single unit factor (Mouse) with 32 levels, each level corresponding to one mouse. Unit factors are of several basic types: the smallest subdivision of the experimental material on which levels of a treatment factor are randomly assigned is called the experimental unit of this treatment factor; it provides the main contributions to the residual variance for testing the treatment factor. Units can be grouped together by a grouping factor, also known as a blocking factor or non-specific factor; these are of no direct inferential interest, but are used to remove variation from comparisons or take account of units in the same group being more similar than units in different groups. Finally, a unit factor can be intrinsic and describe a non-randomizable property of another unit factor; a common example is the sex of an animal, which we cannot deliberately choose (so it is not a treatment), but which we need to keep track of in our inferences.

Treatment factors are often fixed factors with pre-determined fixed levels, while unit factors are often random factors whose levels are a random sample from a population; in a replication of the experiment, the fixed factor levels would remain the same (we use the same four drugs again), while the random factor levels change (we do not use the same mice again). We denote treatment factors by an informative name written in bold and unit factors in italics; we denote random factors by parentheses: the treatment factor Drug is fixed for our experiment, while the unit factor (Mouse) is random.

The observations are recorded on the response unit factor and we only consider experiments with a simple response structure where a single value is observed on one unit factor in the design, which we denote by underlining.

When designing an experiment, the treatment structure is determined by the purpose of the experiment: what experimental factors to consider and how to combine factor levels to treatments. The unit structure is then used to accommodate the treatment structure and to maximize precision and power for the intended comparisons. In other words:

The treatment structure is the driver in planning experiments, the design structure is the vehicle. (Belle 2008, p180)

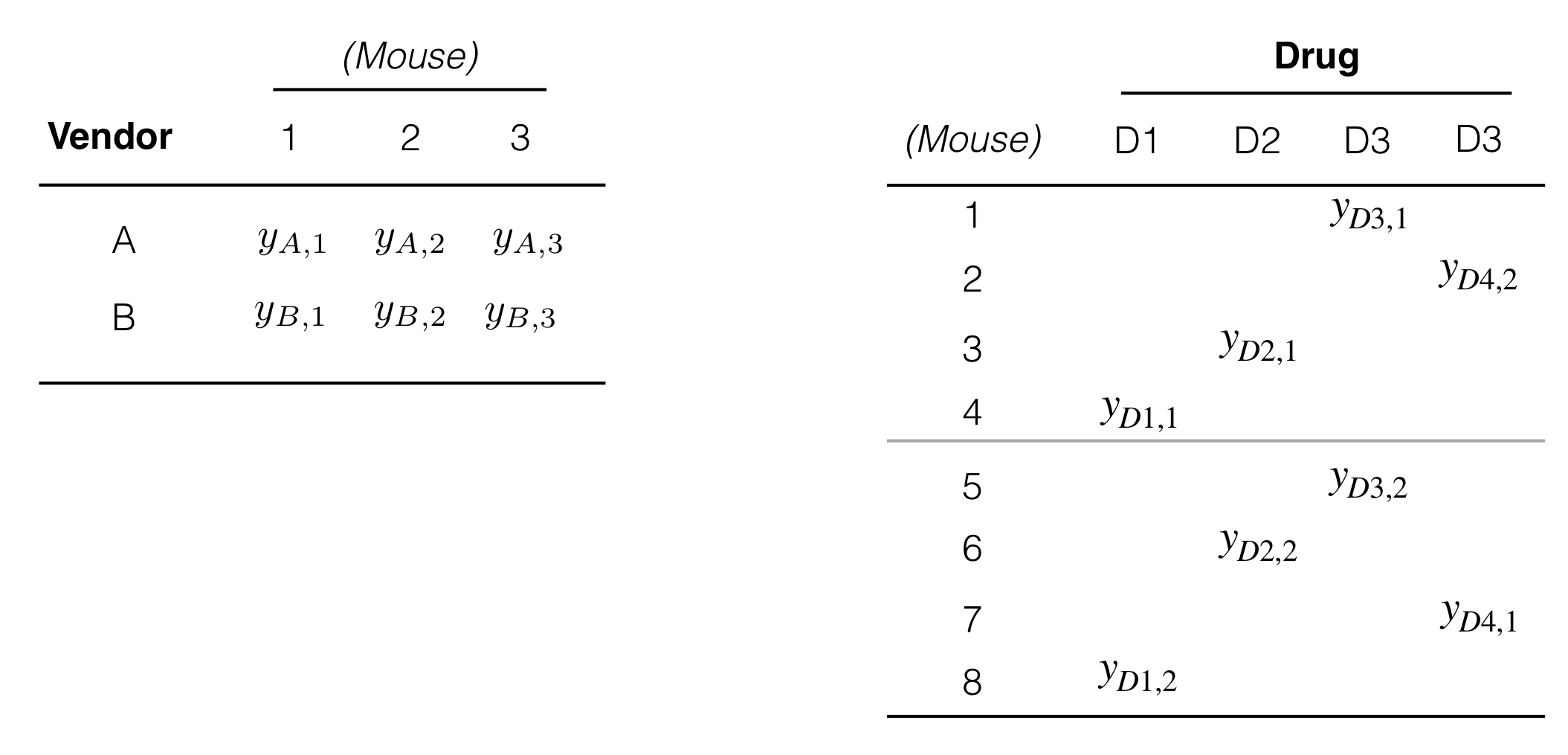

Figure 4.3: Examples of data layouts for crossed (left) and nested (right) factors.

The treatment and unit structures are created by nesting and crossing of factors. A factor A is crossed with another factor B if each level of A occurs together with each level of B and vice-versa. This implicitly defines a third interaction factor denoted A:B, whose levels are the possible combinations of levels of A with levels of B. In our paired design (Fig. 2.8) the treatment factor Vendor is crossed with (Mouse), since each kit (that is, each level of Vendor) is assigned to each mouse. We omitted the interaction factor, since it coincides with (Sample) in this case. The data layout for two crossed factors is shown in Figure 4.3(left); the cross-tabulation is completely filled.

A factor B is nested in another factor A if each level of B occurs together with one and only one level of A. For our current example, the factor (Mouse) is nested in Drug, since we have one or more mice per drug, but each mouse is associated with exactly one drug; Figure 4.3(right) illustrates the nesting for two mice per drug.

The experiment structure provides the logical arrangement of units and treatments. It is constructed by making the randomization of each treatment on its experimental unit explicit.

Constructing the Hasse diagrams

To emphasize the two components of each experimental design, we draw separate Hasse diagrams for the treatment and unit structures, which we then combine into the experiment structure diagram by considering the randomization. The Hasse diagram visualizes the nesting/crossing relations between the factors. Each factor is represented by a node, shown as the factor name. If a factor \(B\) is nested in factor a \(A\), we write \(B\) below \(A\) and connect the two nodes with an edge. The diagram is thus ‘read’ from top to bottom. If \(A\) and \(B\) are crossed, we write them next to each other and connect each to the next factor that it is nested in. We then create a new factor denoted \(A:B\), whose levels are the combinations of levels of \(A\) with levels of \(B\), and draw one edge from \(A\) and one edge from \(B\) to this factor. Each diagram has a top node called M or M, which represents the grand mean, and all other factors are nested in this top node.

We construct the experiment structure diagram as follows: first, we merge the two top nodes M and M of the treatment and unit structure diagram, respectively, into a single node M. We then draw an edge from each treatment factor to its experimental unit factor. If necessary, we clean up the resulting diagram by removing unncessary ‘shortcut’ edges: whenever there is a path \(A\)-\(B\)-\(C\), we remove the edge \(A\)-\(C\) if it exists since its nesting relation is already implied by the path.

We complete the diagram by adding the number of levels for each factor as a superscript to its node, and by adding the degrees of freedom for this factor as a subscript. The degrees of freedom for a factor \(A\) are calculated as the number of levels minus the degrees of freedom of each factor that \(A\) is nested in. The number of levels and the degrees of freedom of the top node \(M\) are both one.

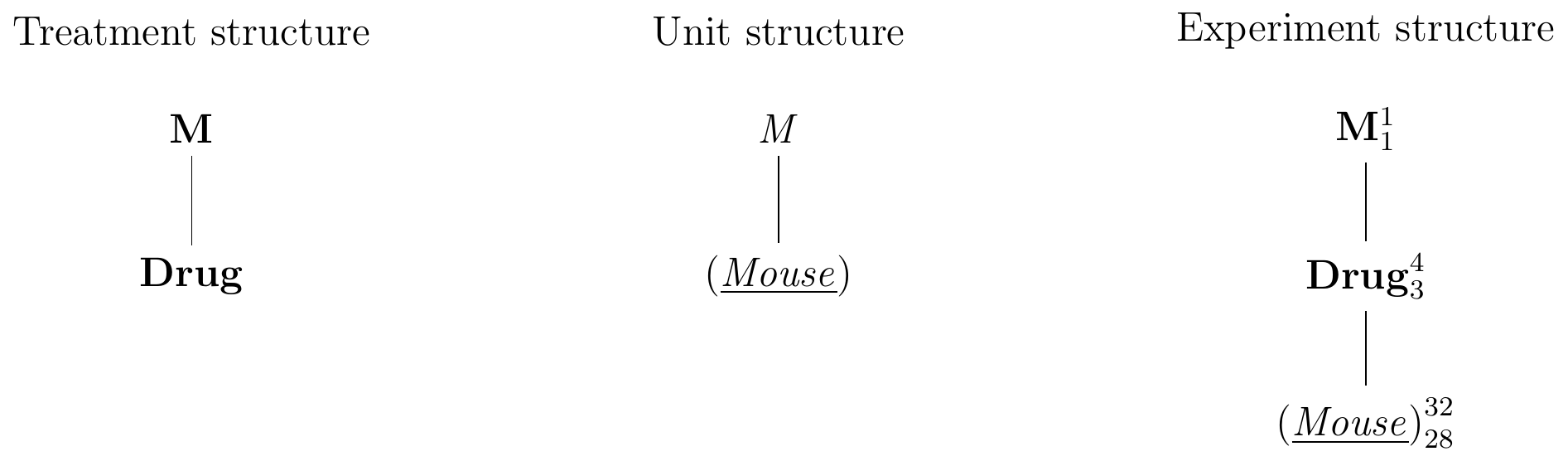

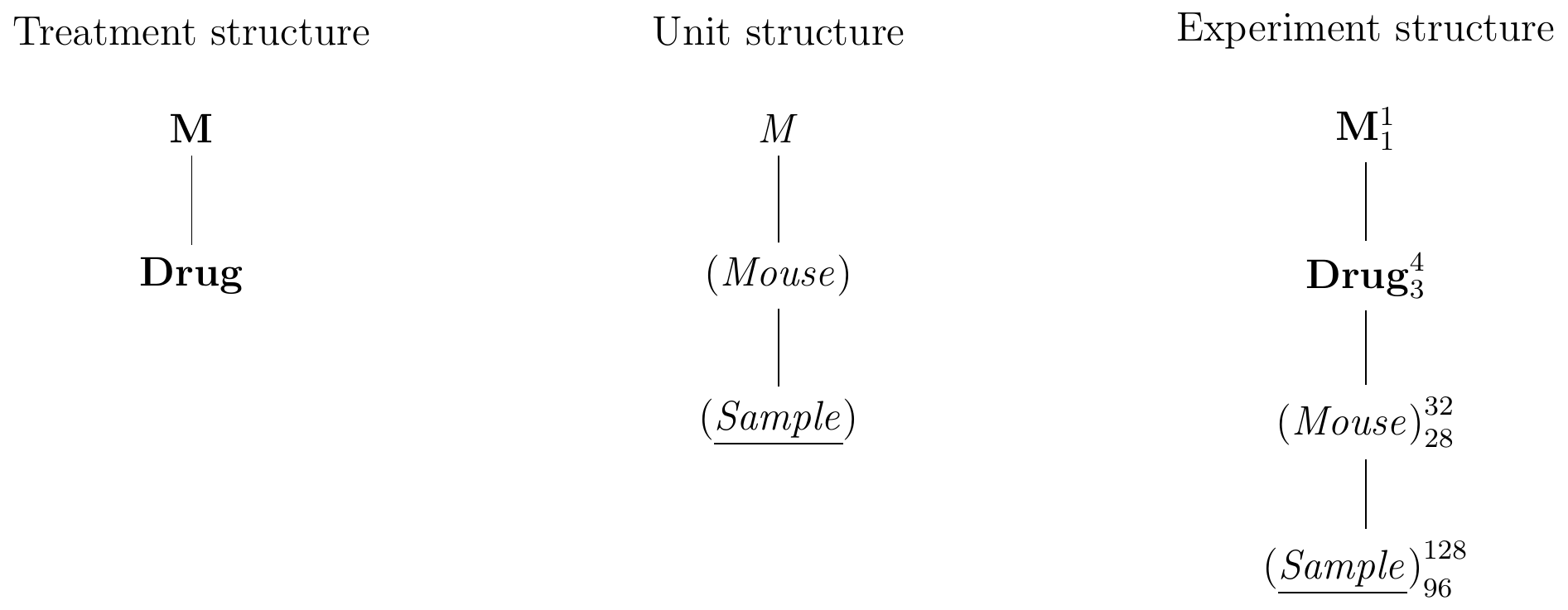

The Hasse diagrams our drug example are shown in Figure 4.4. The treatment structure contains the single treatment factor Drug, nested in the obligatory top node M (Fig. 4.4(left)). Similarly, the unit structure contains only the factor (Mouse) nested in the obligatory top node M (Fig. 4.4(center)).

To construct the experiment diagram, we merge the two top nodes M and M into a single node M. Both Drug and (Mouse) are now nested under the same top node. Since Drug is randomized on (Mouse), we write (Mouse) below Drug and connect the two nodes with an edge. This make the edge from M to (Mouse) redundant and we remove it from the diagram (Fig. 4.4(center)).

The superscripts are the number of factor levels: 1 for M, 4 for Drug, and 32 for (Mouse). The degrees of freedom for Drug are therefore \(3=4-1\), the number of levels minus the degrees of freedom of M. The degrees of freedom for (Mouse) are 28, which we calculate by subtracting from its number of levels (32) the three degrees of freedom of Drug and the single degree of freedom for M.

Figure 4.4: Hasse diagrams for treatment structure (left), unit structure (center), and experiment structure (right) for a completely randomized design for determining effect of four different drugs using 8 mice per drug and a single response measurement per mouse.

Hasse diagrams and \(F\)-tests

We can derive the omnibus \(F\)-test from the experiment diagram in Figure 4.4(center). The omnibus null hypothesis claims equality of the group means for the treatment factor Drug: this factor has four such means (given by the superscript), and the \(F\)-statistic has 3 degrees of freedom (given by the subscript). For any experimental design, we find the corresponding factor that provides the within-group variance \(\sigma_e^2\) in the diagram by starting from the factor tested and moving downwards along edges until we find the first random factor. In our example, this trivially leads to identifying (Mouse) as the relevant factor, providing \(N-k=28\) degrees of freedom for the \(F\)-denominator.

A example with subsampling

In biological experimentation, experimental units are frequently sub-sampled, and the data contains several response values for each experimental unit. In our example, we might still randomize the drug treatments on the mice, but take four serum samples instead of one from each mouse and measure them independently. Then, the mice are still the experimental unit for the treatment, but the serum samples now provide the response units. The Hasse diagrams in Figure 4.5 illustrate this design.

Figure 4.5: Completely randomized design for determining effect of four different drugs using 8 mice per drug and four samples measured per mouse.

The treatment structure is still identical to our previous example, and contains Drug as its only relevant factor. The unit structure now contains a new factor, (Sample), with 128 levels, one for each measured enzyme level. Since each sample belongs to one mouse, and each mouse has several samples, the factor (Sample) is nested in (Mouse). The observations are then partitioned first into 32 groups—one per mouse—and further into 128—one per sample per mouse. For the experiment structure, we randomize Drug on (Mouse), leading to the diagram in Figure 4.5(right).

The \(F\)-test for the drug effect again uses the variation for Drug on 3 degrees of freedom. Using our rule, we find that (Mouse)—and not (Sample)—provides the estimate of the variance for the \(F\)-denominator on 28 degrees of freedom. As far as this test is concerned, the 128 samples are technical replicates or pseudo-replicates. They do not reflect the biological variation against which we need to test the differences in enzyme levels for the four drugs, since drugs are randomized on mice and not on samples.

4.4.2 The linear model

For a completely randomized design with \(k\) treatment groups, we can write each datum \(y_{ij}\) explicitly as the corresponding treatment group mean and a random deviation from this mean: \[\begin{equation} y_{ij} = \mu_i + e_{ij} = \mu + \alpha_i + e_{ij}\;. \tag{4.3} \end{equation}\] The first model is called a cell means model, while the second, equivalent, model is a parametric model. If the treatments had no effect, then all \(\alpha_i=\mu_i-\mu\) are zero and the data are fully described by the grand mean \(\mu\) and the residuals \(e_{ij}\). Thus, the parameters \(\alpha_i\) measure the systematic difference of each treatment from the grand mean and are independent from the experimental units.

It is crucial for an analysis that the linear model fully reflects the structure of the experiment. The Hasse diagrams allow us to derive an appropriate model for any experimental design with comparative ease. For our example, the diagram in Figure 4.4(right) has three factors: M, Drug, and (Mouse), and these are reflected in the three sets of parameters \(\mu\), \(\alpha_i\), and \(e_{ij}\). Note that there are four parameters \(\alpha_i\) to produce the four group means, but given three and the general mean \(\mu\), the fourth parameter can be calculated; thus, there are four parameters \(\alpha_i\), but only three can be independently estimated given \(\mu\), as reflected by the three degrees of freedom for Drug. Further, the \(e_{ij}\) are 32 random variables, and this is reflected in the fact that (Mouse) is a random factor. Given estimates for \(\mu\) and \(\alpha_i\), the \(e_{ij}\) in each of the four groups must sum to zero and only 28 values are independent.

4.4.3 Analysis of variance in R

The aov() function provides all the necessary functionality for calculating complex ANOVAs and for estimating the model parameters of the corresponding linear models. It requires two arguments: data= indicates a data-frame with one column for each variable in the model, and aov() will use the values in these columns as the input data. The model itself is specified using a formulae in the formula= argument. This formulae describes the factors in the model and their crossing / nesting relationsships, and can be derived directly from the experiment diagram.

For our example, the data is stored in a data-frame called d which consists of 32 rows and three columns: y contains the observed enzyme level, drug the drug (\(D1\) to \(D4\)), and mouse the number of the corresponding mouse (\(1-32\)). Our data are then analyzed using the command aov(formula=y~1+drug+Error(mouse), data=d).

The corresponding formulae has three parts: on the left hand side, the name of the column containing the observed response values (y), followed by a tilde. Then, a part describing the fixed factors, which we can usually derive from the treatment structure diagram: here, it contains the special symbol 1 for the general mean \(\mu\) and the term drug encoding the four parameters \(\alpha_i\). Finally, the Error() part describes the random factors and is usually equivalent to the unit structure of the experiment. Here, it contains only mouse. An R formulae can often be further simplified; in particular, aov() will always assume a general mean 1, unless it is explicitly removed from the model, and will always assume that each row is one observation relating to the lowest random factor in the diagram. Both parts can be skipped from the formulae and are implicitly added; our formulae is thus equivalent to y~drug. We can read a formulae as an instruction: explain the observations in column y by the factors on the right: a general mean 1 / \(\mu\), the refinements drug / \(\alpha_i\) that give the group means when added to the general mean, and the residuals mouse / \(e_{ij}\) that cover the difference between group mean and actual observation.

The function aov() returns a complex data structure containing the fitted model. Using summary(), we can produce the ANOVA table in human-readable form:

| Df | Sum Sq | Mean Sq | F value | Pr(>F) | |

|---|---|---|---|---|---|

| drug | 3 | 155.9 | 51.96 | 35.17 | 1.237e-09 |

| Residuals | 28 | 41.37 | 1.478 |

Each factor in the diagram produces one row in the ANOVA table, with the expection of the trivial factor M. Moreover, the degrees of freedom correspond between diagram factor and table row. This provides a quick and easy check if the model formulae correctly describes the experiment structure. While not strictly necessary here, this is an important and useful feature of Hasse diagrams for more complex experimental designs.

From the Hasse diagrams, we construct the formulae for the ANOVA as follows:

- There is one variable for each factor in the diagram;

- all terms are added using

+; Radds the factor M implicitly, but we can also make it explicit by adding1;- if factors

AandBare crossed, we writeA*Bor equivalentlyA + B + A:B; - if factor

Bis nested inA, we writeA/Bor equivalentlyA + A:B; - the formulae has two parts: one for the random factors inside the

Error()term, and one for the fixed factors outside theError()term; - in most cases, the unit structure describes the random factors and we can use the unit structure diagram to derive the

Error()formulae and we can skip this part if the unit structure contains only a single random factor; - likewise, the treatment structure usually describes the fixed factors, and we can use this diagram to derive the remaining formulae.

In our sub-sampling example, the unit structure contains (Sample) nested in (Mouse) and we describe this nesting by the formulae mouse/sample. The formulae for the model is thus y~1+drug+Error(mouse/sample).

Finally, the diagram in Figure 2.8 gives the experiment structure for two vendor kits randomly assigned to two samples from each mouse. The factors Vendor and (Mouse) are now crossed and the formulae for the model is y~1+vendor+Error(mouse/sample), since the unit and treatment structures are identical to the sub-sampling example.

The aov() function does not directly provide estimates of the group means, and an elegant way of estimating them in R is the emmeans() function from the emmeans package1. It calculates the expected marginal means (sometimes confusingly called least-squares means) as defined in (Searle, Speed, and Milliken 1980) for any combination of experiment factors using the model found by aov(). In our case, we can request the estimated group averages \(\hat{\mu}_i\) for each level of drug, which emmeans() calculates from the model as \(\hat{\mu}_i = \hat{\mu} + \hat{\alpha}_i\):

This yields the estimates, their standard errors, degrees of freedom, and 95%-confidence intervals in Table 4.3.

| drug | emmean | SE | df | lower.CL | upper.CL |

|---|---|---|---|---|---|

| D1 | 14.33 | 0.43 | 28 | 13.45 | 15.21 |

| D2 | 12.81 | 0.43 | 28 | 11.93 | 13.69 |

| D3 | 9.24 | 0.43 | 28 | 8.36 | 10.12 |

| D4 | 9.34 | 0.43 | 28 | 8.46 | 10.22 |

4.5 Appendix

4.5.1 A simple function for calculating sample size

The following code illustrates the power calculations from Section 4.3. It accepts a variety of effect size measures (\(f^2\), \(\eta^2\), or \(\lambda\)), either the numerator degrees of freedom df1= or the number of treatment groups k=, the denominator degrees of freedom df2= or the number of samples n=, and the significance level alpha= and calculates the power of the omnibus \(F\)-test. This code is meant to be more instructional than practical, but its more versatile arguments will also allow us to perform power analysis for linear contrasts, which is not possible with the built-in power.anova.test(). For a sample size calculation, we would start with a small \(n\), use this function to find the power, and then increment \(n\) until the desired power is exceeded.

# Compute power of F-test

# n: sample size

# f2: Cohen's f^2 effect size

# lambda: noncentrality parameter

# eta2: eta-squared effect size

# k: number of groups

# df1: numerator degrees of freedom

# df2: denominator degrees of freedom

# alpha: desired significance level

#

# Need to provide EITHER f2 OR lambda OR eta2

# Need to provide EITHER k OR df1,df2

getPowerF = function(n, f2=NULL, lambda=NULL, eta2=NULL, k=NULL, df1=NULL, df2=NULL, alpha) {

# If necessary, calculate degrees of freedom

# from group size and sample size

if(is.null(df1)) df1 = k - 1

if(is.null(df2)) df2 = n*k - k

# Map effect size to ncp lambda=f2*n*k if necessary

if(is.null(lambda)) {

if(is.null(f2)) {

# Lambda from eta2

lambda = (eta2 / (1-eta2)) * n * (df1+1)

} else {

# Lambda from f2

lambda = f2 * n * (df1+1)

}

}

# Critical quantile for rejection under H0

q = qf(p=1-alpha, df1=df1, df2=df2)

# Power

pwr = 1 - pf(q=q, ncp=lambda, df1=df1, df2=df2)

return(pwr)

}4.5.2 Interpreting ANOVA model parameters

Internally, most statistical software uses a general linear regression routine for calculating an ANOVA. We briefly discuss how the linear model for the one-way ANOVA is translated into a regression model and how the resulting parameters must be interpreted.

The linear model for the one-way ANOVA is \(y_{ij} = \mu + \alpha_i + e_{ij}\) with \(i=1\dots k\) and \(j=1\dots n\). This model describes \(k\) group means \(\mu_1,\dots,\mu_k\) by \(k+1\) parameters \(\mu,\alpha_1,\dots,\alpha_k\) and is overparameterized. It is therefore not possible to estimate all five parameters independently, and any estimation requires an additional constraint (Nelder 1994,@nelder1998b). There are many options for this constraint, but the two most common ones are the treatment coding and the sum coding; both yield five (dependent) parameter estimates, but these estimates will differ in value and in interpretation. In general, these parameter estimates are usually not useful in themselves and are merely used as a vehicle to calculate estimates of the group means, for example. These latter estimates are independent of the specific coding.

The one-way ANOVA is formally equivalent to a linear regression model with regressors \(x_{1j},\dots,x_{kj}\) to encode the \(k\) group memberships: \[ y_{ij} = \mu + \alpha_1\cdot x_{1j} + \cdots + \alpha_k\cdot x_{kj} + e_{ij}\;, \] and sum and treatment codings use different values for the dummy variables \(x_{li}\).

Treatment coding

The treatment coding uses \(\hat{\alpha}_r=0\) for a specific reference group \(r\); \(\hat{\mu}\) then estimates the group mean of the reference group \(r\) and \(\hat{\alpha}_i\) estimates the difference between group \(i\) and the reference group \(r\). The vector of regressors for the response \(y_{ij}\) is

\[\begin{align*}

(x_{1j},\dots,x_{kj}) &= (0,\dots,0,\underbrace{1}_i,0,\dots,0) \text{ for } i\not= r \\

(x_{1j},\dots,x_{kj}) &= (0,\dots,0,\underbrace{0}_i,0,\dots,0) \text{ for } i= r\;.

\end{align*}\]

In R, the treatment groups are usually encoded as a factor variable, and aov() will use its alphabetically first level as the reference group by default. We can set the reference level manually using relevel() in base-R or the more convenient fct_relevel() from the forcats package. We can use the dummy.coef() function to extract all parameter estimates from the fitted model, and summary.lm() to see the estimated parameters together with their standard errors and \(t\)-tests.

For our example, aov() uses the treatment level \(D1\) as the reference level. This gives the estimates (Intercept) \(=\hat{\mu}=\) 14.33 for the average enzyme level in the reference group, which serves as the general mean, \(D1=\hat{\alpha_1}=\) 0 as expected, and the three differences from \(D2\), \(D3\), and \(D4\) to \(D1\) as \(D2=\hat{\alpha_2}=\) -1.51, \(D3=\hat{\alpha_3}=\) -5.09, and \(D4=\hat{\alpha_4}=\) -4.99, respectively. We find the group mean for \(D3\), for example, as \(\hat{\mu}_3=\hat{\mu}+\hat{\alpha}_3=\) 14.33 + -5.09 = 9.24.

Sum coding

The sum coding uses the constraint \(\sum_{i=1}^k \hat{\alpha}_i = 0\). Then, \(\hat{\mu}\) is the estimated general mean, and \(\hat{\alpha}_i\) is the estimated deviation of the \(i\)th group mean from this general mean. The vector of regressors for the response \(y_{ij}\) is

\[\begin{align*}

(x_{1j},\dots,x_{kj}) &= (0,\dots,0,\underbrace{1}_i,0,\dots,0) \text{ for } i=1,\dots,k-1 \\

(x_{1j},\dots,x_{kj}) &= (-1,\cdots,-1, 0) \text{ for } i=k\;. \\

\end{align*}\]

The aov() function uses the argument contrasts= to specify the desired coding for each factor, and contrasts=list(drug=contr.sum(4)) provides the sum encoding for our example. The resulting parameter estimates are (Intercept) \(=\hat{\mu}=\) 11.43 for the average enzyme level over all groups, and \(D1=\hat{\alpha_1}=\) 2.9, \(D2=\hat{\alpha_2}=\) 1.38, \(D3=\hat{\alpha_3}=\) -2.19, and \(D4=\hat{\alpha_4}=\) -2.09, respectively, as the specific differences from each estimated group mean to the estimated general mean. As required, the estimated differences add to zero and we find the group mean for \(D3\), as \(\hat{\mu}_3=\hat{\mu}+\hat{\alpha}_3=\) 11.43 + -2.19 = 9.24.

References

Belle, Gerald van. 2008. Statistical Rules of Thumb: Second Edition. https://doi.org/10.1002/9780470377963.

Cohen, J. 1988. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Lawrence Erlbaum Associates, Hillsdale.

Cohen, J. 1992. “A Power Primer.” Psychological Bulletin 112 (1): 155–59.

Kastenbaum, Marvin A., David G. Hoel, and K. O. Bowman. 1970. “Sample size requirements: One-way Analysis of Variance.” Biometrika 57 (2): 421–30. https://www.jstor.org/stable/2334851.

Nelder, J A. 1994. “The statistics of linear models: back to basics.” Statistics and Computing 4 (4). Kluwer Academic Publishers: 221–34. https://doi.org/10.1007/BF00156745.

Searle, S R, F M Speed, and G A Milliken. 1980. “Population Marginal Means in the Linear-Model - An Alternative to Least-Squares Means.” American Statistician 34 (4): 216–21.

Wheeler, Robert E. 1974. “Portable Power.” Technometrics 16 (2): 193–201. https://doi.org/10.1080/00401706.1974.10489174.